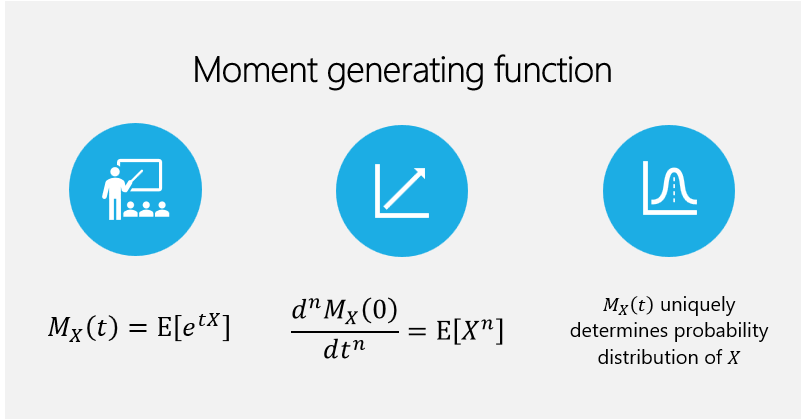

The moment generating function (mgf) is a function often used to characterize the distribution of a random variable.


The moment generating function has great practical relevance because:

1. it can be used to easily derive moments: its derivatives at zero are equal to the moments of the random variable;

2. a probability distribution is uniquely determined by its mgf.

Fact 2, coupled with the analytical tractability of mgfs, makes them a handy tool for solving several problems, such as deriving the distribution of a sum of two or more random variables.

The following is a formal definition.

Definition

Let $X$ be a random variable. If the expected value

$$E[\exp(tX)]$$

exists and is finite for all real numbers $t$ belonging to a closed interval $[-h, h] \subseteq \mathbb{R}$, with $h > 0$, then we say that $X$ possesses a moment generating function and the function

$$M_X(t) = E[\exp(tX)]$$

is called the moment generating function of $X$.

Not all random variables possess a moment generating function. However, all random variables possess a characteristic function, another transform that enjoys properties similar to those enjoyed by the mgf.

The next example shows how the mgf of an exponential random variable is calculated.

Example

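Let $X$ be a continuous random variable with support

$$R_X = [0, \infty)$$

and probability density function

$$f_X(x) = \begin{cases} \lambda \exp(-\lambda x) & \text{if } x \in R_X \\ 0 & \text{otherwise} \end{cases}$$

where $\lambda$ is a strictly positive number. The expected value $E[\exp(tX)]$ can be computed as follows:

$$E[\exp(tX)] = \int_{0}^{\infty} \exp(tx)\, \lambda \exp(-\lambda x)\, dx = \lambda \int_{0}^{\infty} \exp((t - \lambda)x)\, dx = \lambda \left[ \frac{1}{t - \lambda} \exp((t - \lambda)x) \right]_{0}^{\infty} = \frac{\lambda}{\lambda - t}$$

where the term at the upper limit of integration vanishes because $t < \lambda$.

Furthermore, the above expected value exists and is finite for any $t \in [-h, h]$, provided $0 < h < \lambda$. As a consequence, $X$ possesses a mgf:

$$M_X(t) = \frac{\lambda}{\lambda - t}$$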
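The closed form above can be checked numerically. The following is a minimal sketch, assuming a midpoint-rule approximation of the integral on a truncated range; the function names, the truncation point `upper` and the value of `lam` are illustrative choices, not part of the lecture.

```python
import math

def exponential_mgf_numeric(t, lam, upper=200.0, steps=200_000):
    """Approximate E[exp(tX)] for an exponential X with rate lam.

    Uses the midpoint rule on [0, upper]; the tail beyond `upper` is
    ignored, which is harmless when (lam - t) * upper is large.
    """
    if t >= lam:
        raise ValueError("the integral is finite only for t < lam")
    width = upper / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * width          # midpoint of the i-th sub-interval
        total += lam * math.exp((t - lam) * x)
    return total * width

def exponential_mgf_closed_form(t, lam):
    """Closed form lam / (lam - t), valid for t < lam."""
    return lam / (lam - t)

lam = 2.0
for t in (-1.0, 0.0, 0.5, 1.5):
    approx = exponential_mgf_numeric(t, lam)
    exact = exponential_mgf_closed_form(t, lam)
    print(f"t={t:+.1f}  numeric={approx:.6f}  closed form={exact:.6f}")
```

The guard on `t >= lam` mirrors the condition $0 < h < \lambda$: outside that range the integrand does not decay and the expected value is not finite.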

The moment generating function takes its name from the fact that it can be used to derive the moments of $X$, as stated in the following proposition.

Proposition

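If a random variable $X$ possesses a mgf $M_X(t)$, then the $n$-th moment of $X$, denoted by $\mu_X(n)$, exists and is finite for any $n \in \mathbb{N}$. Furthermore,

$$\mu_X(n) = E[X^n] = \left. \frac{d^n M_X(t)}{dt^n} \right|_{t=0}$$

where $\left. \frac{d^n M_X(t)}{dt^n} \right|_{t=0}$ is the $n$-th derivative of $M_X(t)$ with respect to $t$, evaluated at the point $t = 0$.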

Proving the above proposition is quite complicated, because a lot of analytical details must be taken care of (see e.g. Pfeiffer - 2012). The intuition, however, is straightforward. Since the expected value is a linear operator and differentiation is a linear operation, under appropriate conditions we can differentiate through the expected value:

$$\frac{d^n}{dt^n} M_X(t) = \frac{d^n}{dt^n} E[\exp(tX)] = E\left[ \frac{d^n}{dt^n} \exp(tX) \right] = E[X^n \exp(tX)]$$

Making the substitution $t = 0$, we obtain

$$\left. \frac{d^n}{dt^n} M_X(t) \right|_{t=0} = E[X^n \exp(0 \cdot X)] = E[X^n]$$

The next example shows how this proposition can be applied.

Example

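In the previous example we have demonstrated that the mgf of an exponential random variable $X$ is

$$M_X(t) = \frac{\lambda}{\lambda - t}$$

The expected value of $X$ can be computed by taking the first derivative of the mgf:

$$\frac{dM_X(t)}{dt} = \frac{\lambda}{(\lambda - t)^2}$$

and evaluating it at $t = 0$:

$$E[X] = \left. \frac{dM_X(t)}{dt} \right|_{t=0} = \frac{\lambda}{(\lambda - 0)^2} = \frac{1}{\lambda}$$

The second moment of $X$ can be computed by taking the second derivative of the mgf:

$$\frac{d^2 M_X(t)}{dt^2} = \frac{2\lambda}{(\lambda - t)^3}$$

and evaluating it at $t = 0$:

$$E[X^2] = \left. \frac{d^2 M_X(t)}{dt^2} \right|_{t=0} = \frac{2\lambda}{(\lambda - 0)^3} = \frac{2}{\lambda^2}$$

And so on for higher moments.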
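The two derivatives in this example can also be approximated numerically. The sketch below assumes central finite differences around $t = 0$ with an illustrative step size `h`; the helper names and the value of `lam` are hypothetical, and the approach is a numerical stand-in for the analytical differentiation used in the text.

```python
def exponential_mgf(t, lam):
    """Mgf of an exponential random variable with rate lam (t < lam)."""
    return lam / (lam - t)

def first_derivative_at_zero(f, h=1e-3):
    """Central-difference approximation of f'(0)."""
    return (f(h) - f(-h)) / (2 * h)

def second_derivative_at_zero(f, h=1e-3):
    """Central-difference approximation of f''(0)."""
    return (f(h) - 2 * f(0.0) + f(-h)) / (h * h)

lam = 2.0

def mgf(t):
    return exponential_mgf(t, lam)

first_moment = first_derivative_at_zero(mgf)     # should be close to 1 / lam
second_moment = second_derivative_at_zero(mgf)   # should be close to 2 / lam**2

print(f"E[X]   approx {first_moment:.6f}, exact {1 / lam:.6f}")
print(f"E[X^2] approx {second_moment:.6f}, exact {2 / lam ** 2:.6f}")
```

The step size trades truncation error against floating-point cancellation, so the approximations agree with $1/\lambda$ and $2/\lambda^2$ only up to a small tolerance.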

The most important property of the mgf is the following.

Proposition

Let $X$ and $Y$ be two random variables. Denote by $F_X(x)$ and $F_Y(y)$ their distribution functions and by $M_X(t)$ and $M_Y(t)$ their mgfs. Then $X$ and $Y$ have the same distribution (i.e., $F_X(x) = F_Y(x)$ for any $x$) if and only if they have the same mgfs (i.e., $M_X(t) = M_Y(t)$ for any $t$).

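For a fully general proof of this proposition see, for example, Feller (2008). We just give an informal proof for the special case in which $X$ and $Y$ are discrete random variables taking only finitely many values. The "only if" part is trivial. If $X$ and $Y$ have the same distribution, then

$$M_X(t) = E[\exp(tX)] = E[\exp(tY)] = M_Y(t)$$

The "if" part is proved as follows. Denote by $R_X$ and $R_Y$ the supports of $X$ and $Y$ and by $p_X(x)$ and $p_Y(y)$ their probability mass functions. Denote by $A$ the union of the two supports:

$$A = R_X \cup R_Y$$

and by $a_1, \ldots, a_n$ the elements of $A$. The mgf of $X$ can be written as

$$M_X(t) = E[\exp(tX)] = \sum_{x \in R_X} \exp(tx)\, p_X(x) = \sum_{i=1}^{n} \exp(t a_i)\, p_X(a_i)$$

where the last equality holds because $p_X(a_i) = 0$ if $a_i \notin R_X$. By the same token, the mgf of $Y$ can be written as:

$$M_Y(t) = \sum_{i=1}^{n} \exp(t a_i)\, p_Y(a_i)$$

If $X$ and $Y$ have the same mgf, then for any $t$ belonging to a closed neighborhood of zero

$$M_X(t) = M_Y(t)$$

and

$$\sum_{i=1}^{n} \exp(t a_i)\, p_X(a_i) = \sum_{i=1}^{n} \exp(t a_i)\, p_Y(a_i)$$

Rearranging terms, we obtain

$$\sum_{i=1}^{n} \exp(t a_i) \left[ p_X(a_i) - p_Y(a_i) \right] = 0$$

Because the functions $\exp(t a_i)$ associated with distinct values $a_i$ are linearly independent, this can be true for any $t$ belonging to a closed neighborhood of zero only if

$$p_X(a_i) - p_Y(a_i) = 0$$

for every $i$. It follows that the probability mass functions of $X$ and $Y$ are equal. As a consequence, their distribution functions are also equal.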
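The finite discrete case of this argument can be illustrated numerically: if the support is known, the values of the mgf at as many distinct points $t$ as there are support points pin down the probability mass function. The sketch below is only an illustration under that assumption; the support, the probabilities, the evaluation points and the small linear solver are all hypothetical choices made for the example.

```python
import math

def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        # Bring the row with the largest entry in this column to the pivot position.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    solution = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - tail) / aug[row][row]
    return solution

support = [0.0, 1.0, 2.5]      # the points a_1, ..., a_n (illustrative)
true_pmf = [0.2, 0.5, 0.3]     # p_X(a_i), unknown to the "solver"
t_values = [-1.0, 0.0, 1.0]    # distinct points near zero

# Mgf values M_X(t) = sum_i exp(t * a_i) * p_X(a_i) at the chosen points.
mgf_values = [
    sum(math.exp(t * a) * p for a, p in zip(support, true_pmf))
    for t in t_values
]

# Each row holds exp(t * a_i) for one t; solving recovers the pmf.
matrix = [[math.exp(t * a) for a in support] for t in t_values]
recovered_pmf = solve_linear_system(matrix, mgf_values)
print(recovered_pmf)
```

The system has a unique solution for distinct support points and distinct values of $t$, which is the linear-independence fact the proof relies on.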

This proposition is extremely important and relevant from a practical viewpoint: in many cases where we need to prove that two distributions are equal, it is much easier to prove equality of the moment generating functions than to prove equality of the distribution functions.

Also note that equality of the distribution functions can be replaced in the proposition above by:

- equality of the probability mass functions (if $X$ and $Y$ are discrete random variables);

- equality of the probability density functions (if $X$ and $Y$ are continuous random variables).

The following sections contain more details about the mgf.

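Let $X$ be a random variable possessing a mgf $M_X(t)$. Define

$$Y = a + bX$$

where $a, b \in \mathbb{R}$ are two constants and $b \neq 0$. Then, the random variable $Y$ possesses a mgf $M_Y(t)$ and

$$M_Y(t) = \exp(at)\, M_X(bt)$$

By the very definition of mgf, we have

$$M_Y(t) = E[\exp(tY)] = E[\exp(at + btX)] = \exp(at)\, E[\exp(btX)] = \exp(at)\, M_X(bt)$$

Obviously, if $M_X(t)$ is defined on a closed interval $[-h, h]$, then $M_Y(t)$ is defined on the interval $\left[ -\frac{h}{|b|}, \frac{h}{|b|} \right]$.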
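The linear-transformation rule can be checked exactly on a small discrete example. The following sketch assumes a hypothetical three-point distribution and illustrative constants `a` and `b`; it computes the mgf of $Y = a + bX$ directly from the transformed distribution and compares it with $\exp(at) M_X(bt)$.

```python
import math

def mgf_from_pmf(pmf, t):
    """E[exp(tX)] for a discrete variable given as {value: probability}."""
    return sum(math.exp(t * x) * p for x, p in pmf.items())

pmf_x = {0: 0.2, 1: 0.5, 3: 0.3}   # illustrative distribution of X
a, b = 1.5, -2.0                    # illustrative constants, b != 0

# Distribution of Y = a + b * X: same probabilities, shifted and scaled values.
pmf_y = {a + b * x: p for x, p in pmf_x.items()}

for t in (-0.5, 0.0, 0.3):
    direct = mgf_from_pmf(pmf_y, t)
    via_formula = math.exp(a * t) * mgf_from_pmf(pmf_x, b * t)
    print(f"t={t:+.1f}  direct={direct:.6f}  exp(at)*M_X(bt)={via_formula:.6f}")
```

A finite discrete variable has a mgf for every real $t$, so no restriction on the interval is needed in this particular check.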

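Let $X_1$, ..., $X_n$ be $n$ mutually independent random variables. Let $Z$ be their sum:

$$Z = \sum_{i=1}^{n} X_i$$

Then, the mgf of $Z$ is the product of the mgfs of $X_1$, ..., $X_n$:

$$M_Z(t) = \prod_{i=1}^{n} M_{X_i}(t)$$

This is easily proved by using the definition of mgf and the properties of mutually independent variables:

$$M_Z(t) = E[\exp(tZ)] = E\left[ \exp\left( t \sum_{i=1}^{n} X_i \right) \right] = E\left[ \prod_{i=1}^{n} \exp(t X_i) \right] = \prod_{i=1}^{n} E[\exp(t X_i)] = \prod_{i=1}^{n} M_{X_i}(t)$$

where the fourth equality holds because the variables are mutually independent.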
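The product rule can be verified exactly for discrete variables by building the distribution of the sum explicitly. The sketch below assumes two independent fair dice as an illustrative example; the helper names are hypothetical.

```python
import math
from itertools import product

def mgf_from_pmf(pmf, t):
    """E[exp(tX)] for a discrete variable given as {value: probability}."""
    return sum(math.exp(t * x) * p for x, p in pmf.items())

def pmf_of_independent_sum(pmf_a, pmf_b):
    """Distribution of A + B when A and B are independent (discrete convolution)."""
    out = {}
    for (x, p), (y, q) in product(pmf_a.items(), pmf_b.items()):
        out[x + y] = out.get(x + y, 0.0) + p * q
    return out

die = {face: 1 / 6 for face in range(1, 7)}   # one fair die (illustrative)
pmf_sum = pmf_of_independent_sum(die, die)    # sum of two independent dice

for t in (-0.4, 0.0, 0.25):
    mgf_of_sum = mgf_from_pmf(pmf_sum, t)
    product_of_mgfs = mgf_from_pmf(die, t) ** 2
    print(f"t={t:+.2f}  M_Z(t)={mgf_of_sum:.6f}  product={product_of_mgfs:.6f}")
```

Independence enters through the convolution step, where joint probabilities are taken to be products of marginal probabilities; without it the two columns would generally differ.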

The multivariate generalization of the mgf is discussed in the lecture on the joint moment generating function.

Some solved exercises on moment generating functions can be found below.

Let $X$ be a discrete random variable having a Bernoulli distribution. Its support is

$$R_X = \{0, 1\}$$

and its probability mass function is

$$p_X(x) = \begin{cases} p & \text{if } x = 1 \\ 1 - p & \text{if } x = 0 \\ 0 & \text{otherwise} \end{cases}$$

where $p \in (0, 1)$ is a constant. Derive the moment generating function of $X$, if it exists.

By the definition of moment generating function, we have

$$M_X(t) = E[\exp(tX)] = \sum_{x \in R_X} \exp(tx)\, p_X(x) = \exp(t \cdot 1)\, p + \exp(t \cdot 0)\, (1 - p) = 1 - p + p \exp(t)$$

Obviously, the moment generating function exists and it is well-defined because the above expected value exists for any $t \in \mathbb{R}$.
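Since the mgf is an expected value, it can also be estimated by simulation. The following sketch assumes the standard Bernoulli mgf $1 - p + p\exp(t)$ and compares it with a Monte Carlo average of $\exp(tX)$; the sample size, the seed and the value of `p` are illustrative assumptions.

```python
import math
import random

def bernoulli_mgf(t, p):
    """Closed-form mgf of a Bernoulli variable with success probability p."""
    return 1 - p + p * math.exp(t)

def simulated_mgf(t, p, draws=100_000, seed=0):
    """Monte Carlo estimate of E[exp(tX)] for a Bernoulli(p) variable."""
    rng = random.Random(seed)       # fixed seed so the run is reproducible
    total = 0.0
    for _ in range(draws):
        x = 1 if rng.random() < p else 0
        total += math.exp(t * x)
    return total / draws

p = 0.3
for t in (-1.0, 0.5, 1.0):
    estimate = simulated_mgf(t, p)
    exact = bernoulli_mgf(t, p)
    print(f"t={t:+.1f}  simulated={estimate:.4f}  closed form={exact:.4f}")
```

The simulated values fluctuate around the closed form with an error that shrinks roughly like the inverse square root of the number of draws.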

Let $X$ be a random variable with moment generating function $M_X(t)$. Derive the variance of $X$.

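We can use the following formula for computing the variance:

$$\mathrm{Var}[X] = E[X^2] - E[X]^2$$

The expected value of $X$ is computed by taking the first derivative of the moment generating function and evaluating it at $t = 0$:

$$E[X] = \left. \frac{dM_X(t)}{dt} \right|_{t=0}$$

The second moment of $X$ is computed by taking the second derivative of the moment generating function and evaluating it at $t = 0$:

$$E[X^2] = \left. \frac{d^2 M_X(t)}{dt^2} \right|_{t=0}$$

Therefore,

$$\mathrm{Var}[X] = \left. \frac{d^2 M_X(t)}{dt^2} \right|_{t=0} - \left( \left. \frac{dM_X(t)}{dt} \right|_{t=0} \right)^2$$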
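The recipe used in this solution (second derivative at zero minus the squared first derivative at zero) can be sketched in code. The example below is an illustration only: it approximates the derivatives with central finite differences and applies them to the Bernoulli mgf $1 - p + p\exp(t)$, chosen here as a convenient test case whose variance $p(1 - p)$ is known; the function names, step size and value of `p` are assumptions.

```python
import math

def variance_from_mgf(mgf, h=1e-3):
    """Approximate Var[X] = M''(0) - M'(0)**2 with central finite differences."""
    first = (mgf(h) - mgf(-h)) / (2 * h)                   # approximates E[X]
    second = (mgf(h) - 2 * mgf(0.0) + mgf(-h)) / (h * h)   # approximates E[X^2]
    return second - first ** 2

p = 0.3

def bernoulli_mgf(t):
    return 1 - p + p * math.exp(t)

variance = variance_from_mgf(bernoulli_mgf)
print(f"approximate variance {variance:.6f}, exact p(1-p) = {p * (1 - p):.6f}")
```

Any function that is a valid mgf on a neighborhood of zero can be passed in place of `bernoulli_mgf`, as long as the step `h` stays inside that neighborhood.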

A random variable $X$ is said to have a Chi-square distribution with $n$ degrees of freedom if its moment generating function is defined for any $t < \frac{1}{2}$ and it is equal to

$$M_X(t) = (1 - 2t)^{-n/2}$$

Define

$$Y = X_1 + X_2$$

where $X_1$ and $X_2$ are two independent random variables having Chi-square distributions with $n_1$ and $n_2$ degrees of freedom respectively. Prove that $Y$ has a Chi-square distribution with $n_1 + n_2$ degrees of freedom.

The moment generating functions of $X_1$ and $X_2$ are

$$M_{X_1}(t) = (1 - 2t)^{-n_1/2}, \qquad M_{X_2}(t) = (1 - 2t)^{-n_2/2}$$

The moment generating function of a sum of independent random variables is just the product of their moment generating functions:

$$M_Y(t) = M_{X_1}(t)\, M_{X_2}(t) = (1 - 2t)^{-n_1/2} (1 - 2t)^{-n_2/2} = (1 - 2t)^{-(n_1 + n_2)/2}$$

Therefore, $M_Y(t)$ is the moment generating function of a Chi-square random variable with $n_1 + n_2$ degrees of freedom. As a consequence, $Y$ has a Chi-square distribution with $n_1 + n_2$ degrees of freedom.
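The result of this exercise can be sanity-checked by simulation. The sketch below relies on an assumption that is not stated in the lecture: a Chi-square variable with $n$ degrees of freedom has the same distribution as a Gamma variable with shape $n/2$ and scale $2$, which is what `random.gammavariate(n / 2, 2.0)` is used for. The degrees of freedom, sample size and seed are illustrative.

```python
import math
import random

def chi_square_mgf(t, dof):
    """Mgf (1 - 2t)^(-dof/2) of a Chi-square variable, valid for t < 1/2."""
    if t >= 0.5:
        raise ValueError("the Chi-square mgf is defined only for t < 1/2")
    return (1 - 2 * t) ** (-dof / 2)

def simulated_mgf_of_sum(t, n1, n2, draws=200_000, seed=0):
    """Monte Carlo estimate of E[exp(t * (X1 + X2))] for independent Chi-squares."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(draws):
        # Assumption: Chi-square(n) is sampled as Gamma(shape=n/2, scale=2).
        x1 = rng.gammavariate(n1 / 2, 2.0)
        x2 = rng.gammavariate(n2 / 2, 2.0)
        total += math.exp(t * (x1 + x2))
    return total / draws

n1, n2 = 2, 3
for t in (-0.3, 0.1):
    estimate = simulated_mgf_of_sum(t, n1, n2)
    exact = chi_square_mgf(t, n1 + n2)
    print(f"t={t:+.1f}  simulated={estimate:.4f}  Chi-square({n1 + n2}) mgf={exact:.4f}")
```

The values of $t$ are kept well below $1/2$ so that the simulated average has finite variance and settles near the closed form.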

Feller, W. (2008) An introduction to probability theory and its applications, Volume 2, Wiley.

Pfeiffer, P. E. (1978) Concepts of probability theory, Dover Publications.

Please cite as:

Taboga, Marco (2021). "Moment generating function", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-probability/moment-generating-function.
