The joint probability mass function is a function that completely characterizes the distribution of a discrete random vector. When evaluated at a given point, it gives the probability that the realization of the random vector will be equal to that point.

The term joint probability function is often used as a synonym. Sometimes, the abbreviation joint pmf is used.

The following is a formal definition.

Definition

Let $X$ be a $K\times 1$ discrete random vector. Its joint probability mass function is a function $p_X:\mathbb{R}^K\rightarrow[0,1]$ such that

$$p_X(x)=\mathrm{P}(X=x)$$

where $\mathrm{P}(X=x)$ is the probability that the random vector $X$ takes the value $x$.

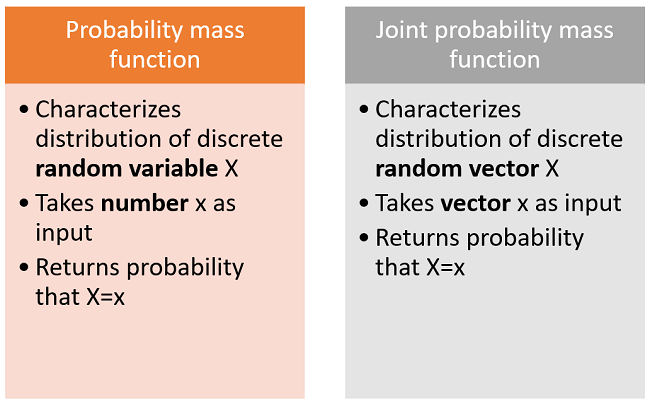

This is a straightforward multivariate generalization of the definition of the probability mass function of a discrete random variable (the univariate case).

Suppose $X$ is a $2\times 1$ discrete random vector and that its support (the set of values it can take) is:

![[eq4]](/images/joint-probability-mass-function__10.png)

If the three values have the same probability, then the joint probability mass function is:

![[eq5]](/images/joint-probability-mass-function__11.png)

When the two components of $X$ are denoted by $X_1$ and $X_2$, the joint pmf can also be written using the following alternative notation:

![[eq6]](/images/joint-probability-mass-function__15.png)
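For readers who cannot see the equation images, the example can be written out in LaTeX. This is a reconstruction, not the original typesetting: the values are read off the tabular form of this same example given later in this entry, while the support symbol $R_X$ and the ordering of the points are assumptions.

```latex
% Support of X (three equally likely points, read off the table)
R_X = \left\{
  \begin{bmatrix} 0 \\ 1 \end{bmatrix},
  \begin{bmatrix} 1 \\ 0 \end{bmatrix},
  \begin{bmatrix} 1 \\ 1 \end{bmatrix}
\right\}

% Joint pmf in the component-wise notation
p_{X_1,X_2}(x_1,x_2) =
\begin{cases}
  1/3 & \text{if } x_1 = 0 \text{ and } x_2 = 1 \\
  1/3 & \text{if } x_1 = 1 \text{ and } x_2 = 0 \\
  1/3 & \text{if } x_1 = 1 \text{ and } x_2 = 1 \\
  0   & \text{otherwise}
\end{cases}
```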

The joint pmf can be used to derive the marginal probability mass functions of the single entries of the random vector.

Given $x_1$, the marginal of $X_1$ is

$$p_{X_1}(x_1)=\sum_{x_2\in R_{X_2}}p_{X_1,X_2}(x_1,x_2)$$

In order to get the entire marginal, we need to compute $p_{X_1}(x_1)$ separately for each $x_1$ belonging to the support of $X_1$.

Each of the computations involves a sum over all the possible values of $X_2$ (i.e., over the support $R_{X_2}$).

Similarly, the marginal of $X_2$ is

![[eq10]](/images/joint-probability-mass-function__25.png)
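The marginalization described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the joint pmf is stored as a dictionary keyed by `(x1, x2)` tuples, the helper name `marginal_pmf` is hypothetical, and the probabilities are those of the example as they appear in the tabular form later in this entry.

```python
from collections import defaultdict
from fractions import Fraction


def marginal_pmf(joint_pmf, index):
    """Sum a joint pmf over every entry except the one at position `index`.

    `joint_pmf` maps tuples (one coordinate per entry of the random vector)
    to probabilities. This layout is an assumption made for the sketch.
    """
    marginal = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for point, prob in joint_pmf.items():
        marginal[point[index]] += prob
    return dict(marginal)


# Joint pmf of the example, keyed by (x1, x2); values taken from the table.
third = Fraction(1, 3)
joint = {(0, 0): Fraction(0), (0, 1): third, (1, 0): third, (1, 1): third}

p_x1 = marginal_pmf(joint, 0)  # for each x1, sums over the values of x2
p_x2 = marginal_pmf(joint, 1)  # for each x2, sums over the values of x1
```

Exact fractions are used instead of floats so that the results can be compared directly with the hand computations.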

Let us derive the marginal pmf of $X_1$ from the joint pmf in the previous example.

The supports of $X_1$ and $X_2$ are $R_{X_1}=\{0,1\}$ and $R_{X_2}=\{0,1\}$.

We have

![[eq12]](/images/joint-probability-mass-function__30.png)

and

![[eq13]](/images/joint-probability-mass-function__31.png)

Thus, the marginal probability mass function of $X_1$ is

![[eq14]](/images/joint-probability-mass-function__33.png)

If a random vector has two entries $X_1$ and $X_2$, then its joint pmf can be written in tabular form:

- each row corresponds to one of the possible values of $X_1$;
- each column corresponds to one of the possible values of $X_2$;
- each cell contains the probability of a couple of values $(x_1,x_2)$.

In the next example it will become clear why the tabular form is very convenient.

Let us put in tabular form the joint pmf used in the previous examples.

| | x2=0 | x2=1 | Marginal of X1 |
|---|---|---|---|
| x1=0 | 0 | 1/3 | 1/3 |
| x1=1 | 1/3 | 1/3 | 2/3 |
| Marginal of X2 | 1/3 | 2/3 | |

We can easily obtain the marginals by summing the probabilities by row (which gives the marginal of $X_1$) and by column (which gives the marginal of $X_2$).
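The row and column sums of the table can be checked with a short Python sketch. The nested-list layout (rows for x1, columns for x2) mirrors the table and is an assumption of this example, as are the variable names.

```python
from fractions import Fraction

third = Fraction(1, 3)

# Rows are x1 = 0, 1; columns are x2 = 0, 1 (same layout as the table).
table = [
    [Fraction(0), third],
    [third, third],
]

marginal_x1 = [sum(row) for row in table]        # row sums
marginal_x2 = [sum(col) for col in zip(*table)]  # column sums
total = sum(marginal_x1)                         # all cells together
```

Both lists reproduce the margins of the table, and `total` confirms that the cell probabilities sum to one.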

The joint pmf can also be used to derive the conditional probability mass function of the single entries of the random vector.

This is carefully explained and illustrated with examples in the glossary entry on conditional pmfs.
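As a rough preview, the sketch below applies the standard definition of a conditional pmf, that is, the joint probability divided by the marginal probability of the conditioning value. The definition is not spelled out in this entry, so treat it as an assumption here; the function name `conditional_pmf_x1` and the dictionary layout are hypothetical, and the probabilities are those of the table above.

```python
from fractions import Fraction


def conditional_pmf_x1(joint_pmf, x2):
    """Return the pmf of X1 given X2 = x2, assuming P(X2 = x2) > 0."""
    # Marginal probability of the conditioning value.
    p_x2 = sum(p for (a, b), p in joint_pmf.items() if b == x2)
    if p_x2 == 0:
        raise ValueError("conditioning value has zero probability")
    # Divide each matching joint probability by the marginal.
    return {a: p / p_x2 for (a, b), p in joint_pmf.items() if b == x2}


third = Fraction(1, 3)
joint = {(0, 0): Fraction(0), (0, 1): third, (1, 0): third, (1, 1): third}

given_x2_is_1 = conditional_pmf_x1(joint, 1)
given_x2_is_0 = conditional_pmf_x1(joint, 0)
```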

For a thorough discussion of joint pmfs, go to the lecture entitled Random vectors, where discrete random vectors are introduced and you can also find some solved exercises involving joint pmfs.


Please cite as:

Taboga, Marco (2021). "Joint probability mass function", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/glossary/joint-probability-mass-function.
