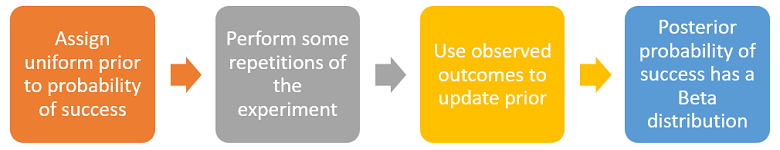

The Beta distribution is a continuous probability distribution often used to model the uncertainty about the probability of success of an experiment.


The Beta distribution can be used to analyze probabilistic experiments that have only two possible outcomes:

- success, with probability $p$;
- failure, with probability $1-p$.

These experiments are called Bernoulli experiments.

Suppose that $p$ is unknown and all its possible values are deemed equally likely.

This uncertainty can be described by assigning to $p$ a uniform distribution on the interval $[0,1]$.

This is appropriate because:

- $p$, being a probability, can take only values between $0$ and $1$;
- the uniform distribution assigns equal probability density to all points in the interval $[0,1]$, which reflects the fact that no possible value of $p$ is, a priori, deemed more likely than all the others.

Now, suppose that:

- we perform $n$ independent repetitions of the experiment;
- we observe $k$ successes and $n-k$ failures.

After performing the experiments, we want to know how we should revise the distribution initially assigned to $p$, in order to properly take into account the information provided by the observed outcomes.

In other words, we want to calculate the conditional distribution of $p$ (also called posterior distribution), conditional on the number of successes and failures we have observed.

The result of this calculation is a Beta distribution. In particular, the conditional distribution of $p$, conditional on having observed $k$ successes out of $n$ trials, is a Beta distribution with parameters $k+1$ and $n-k+1$.

The Beta distribution is characterized as follows.

Definition

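Let $X$ be a continuous random variable. Let its support be the unit interval:

$$R_X=[0,1]$$

Let $\alpha>0$ and $\beta>0$. We say that $X$ has a Beta distribution with shape parameters $\alpha$ and $\beta$ if and only if its probability density function is

$$f_X(x)=\begin{cases}\dfrac{1}{B(\alpha,\beta)}\,x^{\alpha-1}(1-x)^{\beta-1} & \text{if } x\in R_X\\[2mm] 0 & \text{if } x\notin R_X\end{cases}$$

where $B(\alpha,\beta)$ is the Beta function.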
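As an illustration, the density above can be evaluated with a few lines of Python. This is a minimal sketch using only the standard library; the function name `beta_pdf` is our own, and the Beta function is computed through the identity $B(\alpha,\beta)=\Gamma(\alpha)\Gamma(\beta)/\Gamma(\alpha+\beta)$ on the log scale (via `math.lgamma`) to avoid overflow of the Gamma function for large parameters.

```python
import math


def beta_pdf(x: float, alpha: float, beta: float) -> float:
    """Density of a Beta(alpha, beta) random variable evaluated at x."""
    if alpha <= 0 or beta <= 0:
        raise ValueError("alpha and beta must be strictly positive")
    if x < 0.0 or x > 1.0:
        return 0.0  # outside the support [0, 1]
    if (x == 0.0 and alpha < 1) or (x == 1.0 and beta < 1):
        return math.inf  # the density is unbounded at this endpoint
    # log B(alpha, beta) = log Gamma(alpha) + log Gamma(beta) - log Gamma(alpha + beta)
    log_b = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    return math.exp(-log_b) * x ** (alpha - 1) * (1.0 - x) ** (beta - 1)


print(beta_pdf(0.5, 2, 2))  # approximately 1.5, since B(2, 2) = 1/6
print(beta_pdf(0.3, 1, 1))  # flat density, equal to 1 on [0, 1]
```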

A random variable having a Beta distribution is also called a Beta random variable.

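The following is a proof that $f_X(x)$ is a legitimate probability density function.

Non-negativity descends from the facts that $x^{\alpha-1}(1-x)^{\beta-1}$ is non-negative when $x\geq 0$ and $1-x\geq 0$, and that

$$B(\alpha,\beta)=\frac{\Gamma(\alpha)\Gamma(\beta)}{\Gamma(\alpha+\beta)}$$

is strictly positive (it is a ratio of Gamma functions, which are strictly positive when their arguments are strictly positive; see the lecture entitled Gamma function). That the integral of $f_X(x)$ over $\mathbb{R}$ equals $1$ is proved as follows:

$$\int_{-\infty}^{\infty}f_X(x)\,dx=\int_0^1\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx=\frac{1}{B(\alpha,\beta)}\int_0^1 x^{\alpha-1}(1-x)^{\beta-1}dx=\frac{1}{B(\alpha,\beta)}B(\alpha,\beta)=1$$

where we have used the integral representation

$$B(\alpha,\beta)=\int_0^1 x^{\alpha-1}(1-x)^{\beta-1}dx$$

a proof of which can be found in the lecture entitled Beta function.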
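The fact that the density integrates to $1$ can also be checked numerically. The sketch below is only an illustration: it assumes the composite midpoint rule (our choice; any quadrature rule would do) and parameters $\alpha,\beta\geq1$, for which the integrand is bounded. When $\alpha<1$ or $\beta<1$ the density is unbounded near an endpoint and a plain midpoint rule converges slowly.

```python
import math


def beta_pdf(x: float, alpha: float, beta: float) -> float:
    # Beta(alpha, beta) density for 0 < x < 1
    log_b = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    return math.exp(-log_b) * x ** (alpha - 1) * (1.0 - x) ** (beta - 1)


def integrate_midpoint(f, a: float, b: float, n: int = 100_000) -> float:
    # Composite midpoint rule: f is evaluated only at interior points
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


total = integrate_midpoint(lambda x: beta_pdf(x, 2.5, 4.0), 0.0, 1.0)
print(total)  # very close to 1
```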

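The expected value of a Beta random variable $X$ is

$$E[X]=\frac{\alpha}{\alpha+\beta}$$

It can be derived as follows:

$$\begin{aligned}
E[X] &= \int_0^1 x\,\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx
= \frac{1}{B(\alpha,\beta)}\int_0^1 x^{\alpha}(1-x)^{\beta-1}dx \\
&= \frac{B(\alpha+1,\beta)}{B(\alpha,\beta)}
= \frac{\Gamma(\alpha+1)\Gamma(\beta)}{\Gamma(\alpha+\beta+1)}\,\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)} \\
&= \frac{\alpha\,\Gamma(\alpha)}{(\alpha+\beta)\,\Gamma(\alpha+\beta)}\,\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)}
= \frac{\alpha}{\alpha+\beta}
\end{aligned}$$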
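The derivation rests on the identity $E[X]=B(\alpha+1,\beta)/B(\alpha,\beta)$. A short standard-library Python sketch (the helper names are hypothetical) can confirm numerically that this ratio of Beta functions coincides with $\alpha/(\alpha+\beta)$ for a few arbitrary parameter pairs.

```python
import math


def log_beta_fn(a: float, b: float) -> float:
    # log B(a, b) = log Gamma(a) + log Gamma(b) - log Gamma(a + b)
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def mean_via_beta_ratio(alpha: float, beta: float) -> float:
    # E[X] = B(alpha + 1, beta) / B(alpha, beta), an intermediate step of the derivation
    return math.exp(log_beta_fn(alpha + 1, beta) - log_beta_fn(alpha, beta))


def mean_closed_form(alpha: float, beta: float) -> float:
    # Final result of the derivation
    return alpha / (alpha + beta)


for a, b in [(0.5, 0.5), (2.0, 3.0), (10.0, 1.5)]:
    print(a, b, mean_via_beta_ratio(a, b), mean_closed_form(a, b))
```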

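The variance of a Beta random variable $X$ is

$$\operatorname{Var}[X]=\frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}$$

It can be derived thanks to the usual variance formula ($\operatorname{Var}[X]=E[X^2]-E[X]^2$):

$$\begin{aligned}
E[X^2] &= \frac{1}{B(\alpha,\beta)}\int_0^1 x^{\alpha+1}(1-x)^{\beta-1}dx
= \frac{B(\alpha+2,\beta)}{B(\alpha,\beta)}
= \frac{\Gamma(\alpha+2)\Gamma(\beta)}{\Gamma(\alpha+\beta+2)}\,\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)}
= \frac{(\alpha+1)\alpha}{(\alpha+\beta+1)(\alpha+\beta)} \\
\operatorname{Var}[X] &= E[X^2]-E[X]^2
= \frac{\alpha(\alpha+1)}{(\alpha+\beta)(\alpha+\beta+1)}-\frac{\alpha^2}{(\alpha+\beta)^2} \\
&= \frac{\alpha(\alpha+1)(\alpha+\beta)-\alpha^2(\alpha+\beta+1)}{(\alpha+\beta)^2(\alpha+\beta+1)}
= \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}
\end{aligned}$$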
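A quick Monte Carlo experiment offers an independent sanity check of the mean and variance formulae. This sketch assumes Python's standard-library sampler `random.betavariate` and an arbitrary choice of parameters and seed; with a finite sample the simulated and exact values agree only approximately.

```python
import random


def beta_variance(alpha: float, beta: float) -> float:
    # Var[X] = alpha * beta / ((alpha + beta)^2 * (alpha + beta + 1))
    s = alpha + beta
    return alpha * beta / (s * s * (s + 1))


random.seed(12345)  # fixed seed, only for reproducibility
alpha, beta = 2.0, 5.0
draws = [random.betavariate(alpha, beta) for _ in range(200_000)]

n = len(draws)
sample_mean = sum(draws) / n
sample_var = sum((d - sample_mean) ** 2 for d in draws) / n
print(sample_mean, alpha / (alpha + beta))      # simulated vs exact mean
print(sample_var, beta_variance(alpha, beta))   # simulated vs exact variance
```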

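The $k$-th moment of a Beta random variable $X$ is

$$\mu_X(k)=E[X^k]=\prod_{j=0}^{k-1}\frac{\alpha+j}{\alpha+\beta+j}$$

By the definition of moment, we have

$$\begin{aligned}
E[X^k] &= \int_0^1 x^k\,\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx
= \frac{1}{B(\alpha,\beta)}\int_0^1 x^{\alpha+k-1}(1-x)^{\beta-1}dx
= \frac{B(\alpha+k,\beta)}{B(\alpha,\beta)} \\
&= \frac{\Gamma(\alpha+k)\Gamma(\beta)}{\Gamma(\alpha+\beta+k)}\,\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)} \\
&\overset{\text{A}}{=} \frac{(\alpha+k-1)(\alpha+k-2)\cdots\alpha\,\Gamma(\alpha)}{(\alpha+\beta+k-1)(\alpha+\beta+k-2)\cdots(\alpha+\beta)\,\Gamma(\alpha+\beta)}\,\frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)} \\
&= \prod_{j=0}^{k-1}\frac{\alpha+j}{\alpha+\beta+j}
\end{aligned}$$

where in step $\text{A}$ we have used recursively the fact that $\Gamma(z+1)=z\Gamma(z)$.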
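The product formula is convenient because it avoids evaluating Gamma functions. The sketch below (standard-library Python; the function names are hypothetical) computes $E[X^k]$ both with the product and with the Gamma-function expression that precedes step A, and then uses the first two moments to recover the variance formula.

```python
import math


def moment_product(k: int, alpha: float, beta: float) -> float:
    # E[X^k] = prod_{j=0}^{k-1} (alpha + j) / (alpha + beta + j); the empty product (k = 0) is 1
    result = 1.0
    for j in range(k):
        result *= (alpha + j) / (alpha + beta + j)
    return result


def moment_gamma(k: int, alpha: float, beta: float) -> float:
    # E[X^k] = Gamma(alpha + k) Gamma(alpha + beta) / (Gamma(alpha + beta + k) Gamma(alpha))
    log_value = (math.lgamma(alpha + k) + math.lgamma(alpha + beta)
                 - math.lgamma(alpha + beta + k) - math.lgamma(alpha))
    return math.exp(log_value)


alpha, beta = 2.0, 5.0
for k in range(5):
    print(k, moment_product(k, alpha, beta), moment_gamma(k, alpha, beta))

# Variance from the first two moments: E[X^2] - E[X]^2
m1, m2 = moment_product(1, alpha, beta), moment_product(2, alpha, beta)
print(m2 - m1 ** 2)
```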

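The moment generating function of a Beta random variable $X$ is defined for any $t\in\mathbb{R}$ and it is

$$M_X(t)=1+\sum_{k=1}^{\infty}\left(\prod_{j=0}^{k-1}\frac{\alpha+j}{\alpha+\beta+j}\right)\frac{t^k}{k!}$$

By using the definition of moment generating function, we obtain

$$\begin{aligned}
M_X(t) &= E[\exp(tX)] = \int_0^1 \exp(tx)\,\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx \\
&= \int_0^1 \left(\sum_{k=0}^{\infty}\frac{(tx)^k}{k!}\right)\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx \\
&= \sum_{k=0}^{\infty}\frac{t^k}{k!}\int_0^1 x^k\,\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx
= \sum_{k=0}^{\infty}\frac{t^k}{k!}E[X^k] \\
&= 1+\sum_{k=1}^{\infty}\left(\prod_{j=0}^{k-1}\frac{\alpha+j}{\alpha+\beta+j}\right)\frac{t^k}{k!}
\end{aligned}$$

Note that the moment generating function exists and is well defined for any $t\in\mathbb{R}$ because the integral

$$\int_0^1 \exp(tx)\,\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}dx$$

is guaranteed to exist and be finite: the function $\exp(tx)$ is continuous in $x$ over the bounded interval $[0,1]$, and therefore bounded there by $\exp(|t|)$, so that the integral cannot exceed $\exp(|t|)\int_0^1 f_X(x)\,dx=\exp(|t|)$.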

The above formula for the moment generating function might seem impractical to compute because it involves an infinite sum as well as products whose number of terms increases indefinitely.

However, the function

$$1+\sum_{k=1}^{\infty}\left(\prod_{j=0}^{k-1}\frac{\alpha+j}{\alpha+\beta+j}\right)\frac{t^k}{k!}$$

is the Confluent hypergeometric function of the first kind, usually denoted by ${}_1F_1(\alpha;\alpha+\beta;t)$, which has been extensively studied in many branches of mathematics. Its properties are well known, and efficient algorithms for its computation are available in most software packages for scientific computation.
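To see that the series is not hard to evaluate in practice, here is a minimal Python sketch (standard library only; the function names and the truncation length are our own assumptions). It sums the series term by term, obtaining each term from the previous one, and compares the result with a direct numerical integration of $E[\exp(tX)]$. If SciPy is available, `scipy.special.hyp1f1(alpha, alpha + beta, t)` should return the same value.

```python
import math


def mgf_series(t: float, alpha: float, beta: float, terms: int = 200) -> float:
    # 1 + sum_{k>=1} [prod_{j<k} (alpha + j) / (alpha + beta + j)] * t^k / k!
    # The k-th term equals the (k-1)-th term times (alpha + k - 1) / (alpha + beta + k - 1) * t / k.
    total, term = 1.0, 1.0
    for k in range(1, terms):
        term *= (alpha + k - 1) / (alpha + beta + k - 1) * t / k
        total += term
    return total


def mgf_numeric(t: float, alpha: float, beta: float, n: int = 100_000) -> float:
    # Midpoint-rule approximation of int_0^1 exp(t x) f_X(x) dx
    log_b = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    h = 1.0 / n
    acc = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        acc += math.exp(t * x) * x ** (alpha - 1) * (1.0 - x) ** (beta - 1)
    return acc * h / math.exp(log_b)


print(mgf_series(1.5, 2.0, 3.0), mgf_numeric(1.5, 2.0, 3.0))
```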

The characteristic function of a Beta random variable $X$ is

$$\varphi_X(t)=1+\sum_{k=1}^{\infty}\left(\prod_{j=0}^{k-1}\frac{\alpha+j}{\alpha+\beta+j}\right)\frac{(it)^k}{k!}$$

The derivation of the characteristic function is almost identical to the derivation of the moment generating function (just replace $t$ with $it$ in that proof).

Comments made about the moment generating function, including those about the computation of the Confluent hypergeometric function, apply also to the characteristic function, which is identical to the mgf except for the fact that $t$ is replaced with $it$.
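Because the characteristic function is the same series evaluated at $it$, it can be computed with complex arithmetic. In this illustrative standard-library Python sketch (the function names are hypothetical), the series is evaluated at $it$ and compared with a midpoint-rule approximation of $E[\exp(itX)]$.

```python
import cmath
import math


def cf_series(t: float, alpha: float, beta: float, terms: int = 200) -> complex:
    # Mgf series evaluated at the imaginary argument z = i t
    z = 1j * t
    total, term = 1.0 + 0j, 1.0 + 0j
    for k in range(1, terms):
        term *= (alpha + k - 1) / (alpha + beta + k - 1) * z / k
        total += term
    return total


def cf_numeric(t: float, alpha: float, beta: float, n: int = 100_000) -> complex:
    # Midpoint-rule approximation of int_0^1 exp(i t x) f_X(x) dx
    log_b = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    h = 1.0 / n
    acc = 0j
    for i in range(n):
        x = (i + 0.5) * h
        acc += cmath.exp(1j * t * x) * x ** (alpha - 1) * (1.0 - x) ** (beta - 1)
    return acc * h / math.exp(log_b)


phi = cf_series(4.0, 2.0, 3.0)
print(phi, cf_numeric(4.0, 2.0, 3.0), abs(phi))  # |phi| never exceeds 1
```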

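The distribution function of a Beta random variable $X$ is

$$F_X(x)=\begin{cases}0 & \text{if } x<0\\[1mm] \dfrac{B(x,\alpha,\beta)}{B(\alpha,\beta)} & \text{if } 0\leq x\leq 1\\[2mm] 1 & \text{if } x>1\end{cases}$$

where the function

$$B(x,\alpha,\beta)=\int_0^x s^{\alpha-1}(1-s)^{\beta-1}ds$$

is called incomplete Beta function and is usually computed by means of specialized computer algorithms.

For $x<0$, $F_X(x)=0$, because $X$ cannot be smaller than $0$.

For $x>1$, $F_X(x)=1$, because $X$ is always smaller than or equal to $1$.

For $0\leq x\leq 1$,

$$F_X(x)=\int_{-\infty}^{x}f_X(s)\,ds=\int_0^x\frac{1}{B(\alpha,\beta)}s^{\alpha-1}(1-s)^{\beta-1}ds=\frac{B(x,\alpha,\beta)}{B(\alpha,\beta)}$$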
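Specialized routines are the right tool in practice (for instance, SciPy's `scipy.special.betainc(a, b, x)` returns the regularized ratio $B(x,\alpha,\beta)/B(\alpha,\beta)$). Purely as an illustration of the formula, the following standard-library sketch approximates the incomplete Beta function with composite Simpson's rule; it assumes $\alpha\geq1$ and $\beta\geq1$ so that the integrand is finite at $0$, and the function name is hypothetical.

```python
import math


def beta_cdf(x: float, alpha: float, beta: float, n: int = 2_000) -> float:
    """F_X(x) = B(x, alpha, beta) / B(alpha, beta), via Simpson's rule (n must be even)."""
    if alpha < 1 or beta < 1:
        raise ValueError("this sketch assumes alpha >= 1 and beta >= 1")
    if x <= 0.0:
        return 0.0  # X cannot be smaller than 0
    if x >= 1.0:
        return 1.0  # X is always smaller than or equal to 1

    def integrand(s: float) -> float:
        return s ** (alpha - 1) * (1.0 - s) ** (beta - 1)

    h = x / n
    acc = integrand(0.0) + integrand(x)
    for i in range(1, n):
        acc += (4 if i % 2 else 2) * integrand(i * h)
    incomplete = acc * h / 3  # approximates B(x, alpha, beta)
    log_b = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    return incomplete / math.exp(log_b)


# For Beta(2, 2) the exact distribution function on [0, 1] is 3x^2 - 2x^3
print(beta_cdf(0.3, 2, 2), 3 * 0.3 ** 2 - 2 * 0.3 ** 3)
```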

In the following subsections you can find more details about the Beta distribution.

The following proposition states the relation between the Beta and the uniform distributions.

Proposition

A Beta distribution with parameters $\alpha=1$ and $\beta=1$ is a uniform distribution on the interval $[0,1]$.

When $\alpha=1$ and $\beta=1$, we have that

$$B(1,1)=\frac{\Gamma(1)\Gamma(1)}{\Gamma(2)}=\frac{1\cdot 1}{1}=1$$

Therefore, the probability density function of a Beta distribution with parameters $\alpha=1$ and $\beta=1$ can be written as

$$f_X(x)=\begin{cases}1 & \text{if } x\in[0,1]\\ 0 & \text{otherwise}\end{cases}$$

But the latter is the probability density function of a uniform distribution on the interval $[0,1]$.

The following proposition states the relation between the Beta and the binomial distributions.

Proposition

Suppose $X$ is a random variable having a Beta distribution with parameters $\alpha$ and $\beta$.

Let $Y$ be another random variable such that its distribution conditional on $X$ is a binomial distribution with parameters $n$ and $X$.

Then, the conditional distribution of $X$ given $Y=y$ is a Beta distribution with parameters $\alpha+y$ and $\beta+n-y$.

We are dealing with one continuous random variable $X$ and one discrete random variable $Y$ (together, they form what is called a random vector with mixed coordinates). With a slight abuse of notation, we will proceed as if also $Y$ were continuous, treating its probability mass function as if it were a probability density function. Rest assured that this can be made fully rigorous (by defining a probability density function with respect to a counting measure on the support of $Y$).

By assumption $Y$ has a binomial distribution conditional on $X$, so that its conditional probability mass function is

$$f_{Y|X=x}(y)=\binom{n}{y}x^{y}(1-x)^{n-y}$$

where $\binom{n}{y}$ is a binomial coefficient.

Also, by assumption $X$ has a Beta distribution, so that its probability density function is

$$f_X(x)=\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1}$$

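Therefore, the joint probability density function of $X$ and $Y$ is

$$\begin{aligned}
f_{X,Y}(x,y) &= f_{Y|X=x}(y)\,f_X(x)
= \binom{n}{y}x^{y}(1-x)^{n-y}\,\frac{1}{B(\alpha,\beta)}x^{\alpha-1}(1-x)^{\beta-1} \\
&= \binom{n}{y}\frac{1}{B(\alpha,\beta)}x^{\alpha+y-1}(1-x)^{\beta+n-y-1} \\
&= \binom{n}{y}\frac{B(\alpha+y,\beta+n-y)}{B(\alpha,\beta)}\,\frac{1}{B(\alpha+y,\beta+n-y)}x^{\alpha+y-1}(1-x)^{\beta+n-y-1}
\end{aligned}$$

Thus, we have factored the joint probability density function as

$$f_{X,Y}(x,y)=h(x,y)\,g(y)$$

where

$$h(x,y)=\frac{1}{B(\alpha+y,\beta+n-y)}x^{\alpha+y-1}(1-x)^{\beta+n-y-1}$$

is the probability density function of a Beta distribution with parameters $\alpha+y$ and $\beta+n-y$, and the function

$$g(y)=\binom{n}{y}\frac{B(\alpha+y,\beta+n-y)}{B(\alpha,\beta)}$$

does not depend on $x$.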

By a result proved in the lecture entitled Factorization of joint probability density functions, this implies that the probability density function of $X$ given $Y=y$ is

$$f_{X|Y=y}(x)=h(x,y)$$

Thus, as we wanted to demonstrate, the conditional distribution of $X$ given $Y=y$ is a Beta distribution with parameters $\alpha+y$ and $\beta+n-y$.
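The proposition can also be checked numerically by brute force. The sketch below (standard-library Python; all names and parameter values are arbitrary choices for illustration) evaluates the joint density $f_{Y|X=x}(y)f_X(x)$ on a fine grid of values of $x$, normalizes it, and compares the result with the Beta density with parameters $\alpha+y$ and $\beta+n-y$. The normalizing constant should match $g(y)$.

```python
import math


def log_beta_fn(a: float, b: float) -> float:
    # log B(a, b) computed from log-Gamma values
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def beta_pdf(x: float, a: float, b: float) -> float:
    # Beta(a, b) density for 0 < x < 1
    return math.exp(-log_beta_fn(a, b)) * x ** (a - 1) * (1.0 - x) ** (b - 1)


def grid_posterior(alpha, beta, n, y, grid_size=10_000):
    # Joint density f_{X,Y}(x, y) = binom(n, y) x^y (1 - x)^(n - y) f_X(x) on a midpoint grid
    h = 1.0 / grid_size
    xs = [(i + 0.5) * h for i in range(grid_size)]
    joint = [math.comb(n, y) * x ** y * (1 - x) ** (n - y) * beta_pdf(x, alpha, beta)
             for x in xs]
    marginal = sum(joint) * h  # Riemann-sum approximation of the integral over x
    return xs, [j / marginal for j in joint], marginal


alpha, beta, n, y = 2.0, 3.0, 20, 7
xs, post, marginal = grid_posterior(alpha, beta, n, y)
max_gap = max(abs(p - beta_pdf(x, alpha + y, beta + n - y)) for x, p in zip(xs, post))
g_y = math.comb(n, y) * math.exp(log_beta_fn(alpha + y, beta + n - y) - log_beta_fn(alpha, beta))
print(max_gap, marginal, g_y)
```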

By combining this proposition and the previous one, we obtain the following corollary.

Proposition

Suppose that $X$ is a random variable having a uniform distribution on the interval $[0,1]$. Let $Y$ be another random variable such that its distribution conditional on $X$ is a binomial distribution with parameters $n$ and $X$.

Then, the conditional distribution of $X$ given $Y=y$ is a Beta distribution with parameters $1+y$ and $1+n-y$.

This proposition constitutes a formal statement of what we said in the introduction of this lecture in order to motivate the Beta distribution.

Remember that the number of successes obtained in $n$ independent repetitions of a random experiment having probability of success $p$ is a binomial random variable with parameters $n$ and $p$.

According to the proposition above, when the probability of success $p$ is a priori unknown and all possible values of $p$ are deemed equally likely (they have a uniform distribution), observing the outcome of the $n$ experiments leads us to revise the distribution assigned to $p$, and the result of this revision is a Beta distribution.
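The statement can be illustrated by simulation. In the following standard-library Python sketch (the values $n=10$, $k=3$, the seed and the number of replications are arbitrary), we draw $p$ from the uniform distribution, simulate $n$ Bernoulli trials, and keep $p$ only when exactly $k$ successes are observed. The kept values should behave like draws from a Beta distribution with parameters $k+1$ and $n-k+1$.

```python
import random

random.seed(2021)  # arbitrary seed, for reproducibility only
n, k = 10, 3
kept = []
for _ in range(200_000):
    p = random.random()                                     # prior: p ~ Uniform[0, 1]
    successes = sum(random.random() < p for _ in range(n))  # one Binomial(n, p) draw
    if successes == k:
        kept.append(p)                                      # condition on observing k successes

a, b = k + 1, n - k + 1  # posterior parameters from the proposition
sim_mean = sum(kept) / len(kept)
sim_var = sum((q - sim_mean) ** 2 for q in kept) / len(kept)
print(sim_mean, a / (a + b))
print(sim_var, a * b / ((a + b) ** 2 * (a + b + 1)))
```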

Below you can find some exercises with explained solutions.

A production plant produces items that have a probability $p$ of being defective.

The plant manager does not know $p$,

but from past experience she expects this probability to be equal to

.

Furthermore, she quantifies her uncertainty about $p$ by attaching a standard

deviation of

to her

estimate.

After consulting with an expert in statistics, the manager decides to use a Beta distribution to model her uncertainty about $p$.

How should she set the two parameters of the distribution in order to match her priors about the expected value and the standard deviation of $p$?

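We know that the expected value of a Beta random variable with parameters $\alpha$ and $\beta$ is

$$E[X]=\frac{\alpha}{\alpha+\beta}$$

while its variance is

$$\operatorname{Var}[X]=\frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}$$

The two parameters need to be set in such a way that the expected value and the variance of the Beta distribution match those specified by the manager. This is accomplished by finding a solution to the following system of two equations in two unknowns:

$$\begin{cases}\dfrac{\alpha}{\alpha+\beta}=\mu\\[3mm] \dfrac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}=\sigma^2\end{cases}$$

where for notational convenience we have denoted by $\mu$ the expected value and by $\sigma^2$ the variance (that is, the square of the standard deviation) specified by the manager.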

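The first equation gives

$$\alpha=\mu(\alpha+\beta)$$

or

$$\beta=\frac{1-\mu}{\mu}\alpha$$

By substituting this into the second equation, we get

$$\frac{\alpha\cdot\frac{1-\mu}{\mu}\alpha}{\left(\alpha+\frac{1-\mu}{\mu}\alpha\right)^2\left(\alpha+\frac{1-\mu}{\mu}\alpha+1\right)}=\sigma^2$$

or, since $\alpha+\frac{1-\mu}{\mu}\alpha=\frac{\alpha}{\mu}$,

$$\frac{\frac{1-\mu}{\mu}\alpha^2}{\frac{\alpha^2}{\mu^2}\left(\frac{\alpha}{\mu}+1\right)}=\sigma^2$$

Then we divide the numerator and denominator on the left-hand side by $\alpha^2$:

$$\frac{\frac{1-\mu}{\mu}}{\frac{1}{\mu^2}\left(\frac{\alpha}{\mu}+1\right)}=\sigma^2$$

By computing the products, we get

$$\frac{\mu(1-\mu)}{\frac{\alpha}{\mu}+1}=\sigma^2$$

By taking the reciprocals of both sides, we have

$$\frac{\frac{\alpha}{\mu}+1}{\mu(1-\mu)}=\frac{1}{\sigma^2}$$

By multiplying both sides by $\mu(1-\mu)$, we obtain

$$\frac{\alpha}{\mu}+1=\frac{\mu(1-\mu)}{\sigma^2}$$

Thus the value of $\alpha$ is

$$\alpha=\mu\left(\frac{\mu(1-\mu)}{\sigma^2}-1\right)$$

and the value of $\beta$ is

$$\beta=\frac{1-\mu}{\mu}\alpha=(1-\mu)\left(\frac{\mu(1-\mu)}{\sigma^2}-1\right)$$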

Plugging the manager's numerical values of $\mu$ and $\sigma$ into these two formulae gives the parameters of her prior Beta distribution.
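The two formulae can be wrapped in a small helper. This is a sketch in standard-library Python; the function name is hypothetical, and the input values in the example are made up (they are not meant to reproduce the manager's figures). The check on $\sigma^2<\mu(1-\mu)$ reflects the fact that the Beta variance can be written as $\mu(1-\mu)/(\alpha+\beta+1)$, so it is always smaller than $\mu(1-\mu)$.

```python
def beta_params_from_mean_sd(mu: float, sigma: float) -> tuple[float, float]:
    """Return (alpha, beta) of the Beta distribution with mean mu and standard deviation sigma."""
    if not 0.0 < mu < 1.0:
        raise ValueError("mu must lie strictly between 0 and 1")
    common = mu * (1.0 - mu) / sigma ** 2 - 1.0  # equals alpha / mu = beta / (1 - mu)
    if common <= 0.0:
        raise ValueError("sigma**2 must be smaller than mu * (1 - mu)")
    return mu * common, (1.0 - mu) * common


# Hypothetical inputs, chosen only to illustrate the formulae
alpha, beta = beta_params_from_mean_sd(0.1, 0.05)
print(alpha, beta)  # approximately 3.5 and 31.5
```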

After choosing the parameters of the Beta distribution so as to represent her priors about the probability of producing a defective item (see previous exercise), the plant manager now wants to update her priors by observing new data.

She decides to inspect a production lot of 100 items, and she finds that 3 of the items in the lot are defective.

How should she change the parameters of the Beta distribution in order to take this new information into account?

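Under the hypothesis that the items are produced independently of each other, the number of defective items found in the inspection is a binomial random variable with parameters $n=100$ and $p$.

Updating a Beta distribution based on the outcome of a binomial random variable gives as a result another Beta distribution. Moreover, the two parameters $\alpha'$ and $\beta'$ of the updated Beta distribution are

$$\alpha'=\alpha+3$$

$$\beta'=\beta+100-3=\beta+97$$

where $\alpha$ and $\beta$ are the parameters chosen in the previous exercise.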
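In code, the update amounts to two additions. A minimal sketch follows; the function name and the prior values in the example are hypothetical (in the exercise, the prior parameters are those found in the previous exercise).

```python
def update_beta(alpha: float, beta: float, n: int, k: int) -> tuple[float, float]:
    """Parameters of the Beta posterior after observing k successes
    (here: defective items) in n independent trials, starting from Beta(alpha, beta)."""
    if not 0 <= k <= n:
        raise ValueError("k must be between 0 and n")
    return alpha + k, beta + n - k


# Hypothetical prior parameters, used only for illustration
print(update_beta(3.5, 31.5, n=100, k=3))  # (6.5, 128.5)
```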

After updating the parameters of the Beta distribution (see previous exercise), the plant manager wants to compute again the expected value and the standard deviation of the probability of finding a defective item.

Can you help her?

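We just need to use the formulae for the expected value and the variance of a Beta distribution:

$$E[X]=\frac{\alpha}{\alpha+\beta}\qquad\operatorname{Var}[X]=\frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}$$

and plug in the new values we have found for $\alpha$ and $\beta$, that is,

$$\alpha'=\alpha+3\qquad\beta'=\beta+97$$

In terms of the prior parameters $\alpha$ and $\beta$ found in the first exercise, the result is

$$E[X]=\frac{\alpha+3}{\alpha+\beta+100}$$

$$\operatorname{std}[X]=\sqrt{\operatorname{Var}[X]}=\sqrt{\frac{(\alpha+3)(\beta+97)}{(\alpha+\beta+100)^2(\alpha+\beta+101)}}$$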
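The same computation as a short standard-library Python sketch; the prior parameters below are hypothetical stand-ins for the values found in the first exercise.

```python
import math


def beta_mean_sd(alpha: float, beta: float) -> tuple[float, float]:
    # Mean alpha / (alpha + beta) and standard deviation sqrt(Var[X])
    s = alpha + beta
    return alpha / s, math.sqrt(alpha * beta / (s * s * (s + 1)))


prior = (3.5, 31.5)                         # hypothetical prior parameters
posterior = (prior[0] + 3, prior[1] + 97)   # 3 defective items out of 100
print(beta_mean_sd(*prior))      # approximately (0.1, 0.05)
print(beta_mean_sd(*posterior))  # mean and standard deviation after the inspection
```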

Please cite as:

Taboga, Marco (2021). "Beta distribution", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/probability-distributions/beta-distribution.
