Linear correlation is a measure of dependence between two random variables.

It has the following characteristics:

it ranges between -1 and 1;

it is proportional to covariance;

its interpretation is very similar to that of covariance (see the lecture on Covariance).

Let $X$ and $Y$ be two random variables.

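The linear correlation coefficient (or Pearson's correlation coefficient) between $X$ and $Y$ is

$$\operatorname{Corr}[X,Y] = \frac{\operatorname{Cov}[X,Y]}{\operatorname{stdev}[X]\,\operatorname{stdev}[Y]}$$

where:

$\operatorname{Cov}[X,Y]$ is the covariance between $X$ and $Y$;

$\operatorname{stdev}[X]$ and $\operatorname{stdev}[Y]$ are the standard deviations of $X$ and $Y$.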

The linear correlation coefficient is well-defined only as long as $\operatorname{Cov}[X,Y]$, $\operatorname{stdev}[X]$ and $\operatorname{stdev}[Y]$ exist and are well-defined.

It is often denoted by $\rho_{XY}$.

In principle, the ratio is well-defined only if $\operatorname{stdev}[X]$ and $\operatorname{stdev}[Y]$ are strictly greater than zero.

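However, it is often assumed that $\operatorname{Corr}[X,Y] = 0$ when one of the two standard deviations is zero.

This is equivalent to assuming that $\frac{0}{0} = 0$, because $\operatorname{Cov}[X,Y] = 0$ when one of the two standard deviations is zero: a random variable with zero standard deviation is almost surely equal to its mean, so its deviations from the mean, and hence the covariance, are zero.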
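The definition, together with the zero-standard-deviation convention, fits in a small function. This is a minimal sketch: the name `linear_correlation` is hypothetical, and it assumes the covariance and the two standard deviations have already been computed.

```python
def linear_correlation(cov_xy: float, sd_x: float, sd_y: float) -> float:
    """Corr[X, Y] = Cov[X, Y] / (stdev[X] * stdev[Y]).

    Follows the convention Corr[X, Y] = 0 when either standard deviation is zero
    (then Cov[X, Y] is zero too, so this amounts to taking 0/0 = 0).
    """
    if sd_x < 0 or sd_y < 0:
        raise ValueError("standard deviations cannot be negative")
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov_xy / (sd_x * sd_y)


print(linear_correlation(0.3, 0.5, 1.2))  # 0.5
print(linear_correlation(0.0, 0.0, 2.0))  # 0.0 by convention
```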

The interpretation is similar to the interpretation of covariance: the correlation between $X$ and $Y$ provides a measure of how similar their deviations from the respective means are (see the lecture on Covariance for a detailed explanation).

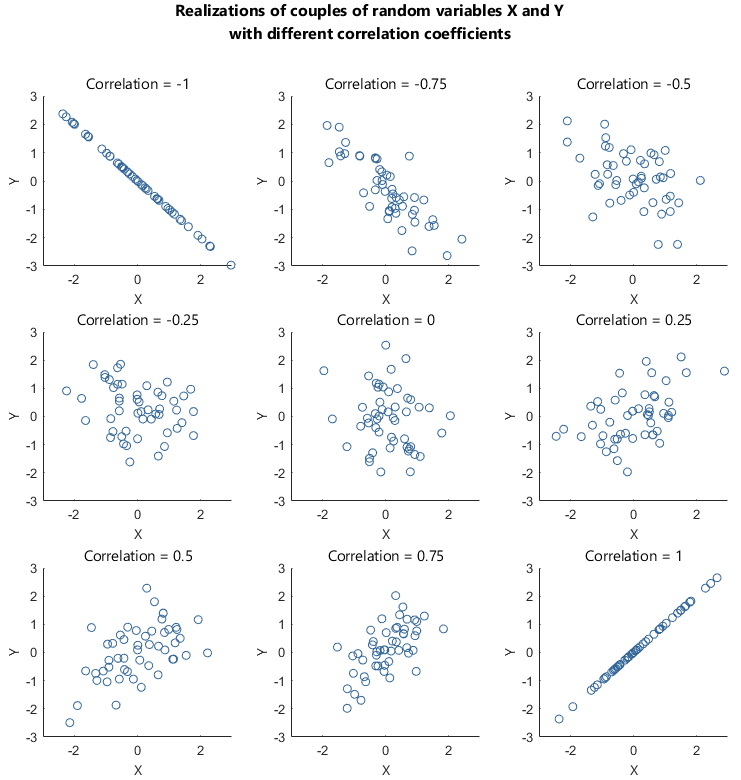

Linear correlation ranges between $-1$ and $1$:

$$-1 \le \operatorname{Corr}[X,Y] \le 1$$

Thanks to this property, correlation allows us to easily understand the intensity of the linear dependence between two random variables:

the closer correlation is to $1$, the stronger the positive linear dependence between $X$ and $Y$ is;

the closer it is to $-1$, the stronger the negative linear dependence between $X$ and $Y$ is.
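The two extreme cases can be checked numerically: when $Y$ is an exact increasing linear function of $X$ the coefficient equals $1$, and when it is an exact decreasing linear function it equals $-1$. The sketch below assumes a hypothetical $X$ taking the values $0$, $1$, $2$ with probabilities $0.2$, $0.5$, $0.3$, stores the joint distribution of $(X, Y)$ as a dictionary from pairs to probabilities, and uses a hypothetical helper `corr_from_joint_pmf`.

```python
import math


def corr_from_joint_pmf(pmf):
    """Corr[X, Y] from a joint pmf {(x, y): prob}; 0.0 if a standard deviation is zero."""
    ex = sum(x * p for (x, _), p in pmf.items())
    ey = sum(y * p for (_, y), p in pmf.items())
    vx = sum((x - ex) ** 2 * p for (x, _), p in pmf.items())
    vy = sum((y - ey) ** 2 * p for (_, y), p in pmf.items())
    cov = sum((x - ex) * (y - ey) * p for (x, y), p in pmf.items())
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


# Hypothetical distribution of X, for illustration only
p_x = {0: 0.2, 1: 0.5, 2: 0.3}
increasing = {(x, 3 * x + 2): p for x, p in p_x.items()}  # Y = 3X + 2
decreasing = {(x, -x): p for x, p in p_x.items()}  # Y = -X
print(corr_from_joint_pmf(increasing))  # approximately 1
print(corr_from_joint_pmf(decreasing))  # approximately -1
```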

The following terminology is often used:

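If $\operatorname{Corr}[X,Y] > 0$ then $X$ and $Y$ are said to be positively linearly correlated (or simply positively correlated).

If $\operatorname{Corr}[X,Y] < 0$ then $X$ and $Y$ are said to be negatively linearly correlated (or simply negatively correlated).

If $\operatorname{Corr}[X,Y] \neq 0$ then $X$ and $Y$ are said to be linearly correlated (or simply correlated).

If $\operatorname{Corr}[X,Y] = 0$ then $X$ and $Y$ are said to be uncorrelated. Also note that

$$\operatorname{Cov}[X,Y] = 0 \implies \operatorname{Corr}[X,Y] = 0$$

Therefore, two random variables $X$ and $Y$ are uncorrelated whenever $\operatorname{Cov}[X,Y] = 0$.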
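The terminology above depends only on the sign of the coefficient. A minimal sketch that maps a coefficient to the corresponding term (the function name `describe_correlation` is hypothetical):

```python
def describe_correlation(rho: float) -> str:
    """Return the term used for a given value of Corr[X, Y]."""
    if not -1.0 <= rho <= 1.0:
        raise ValueError("a linear correlation coefficient lies between -1 and 1")
    if rho > 0:
        return "positively correlated"
    if rho < 0:
        return "negatively correlated"
    # Both nonzero branches above are also cases of "linearly correlated".
    return "uncorrelated"


for rho in (0.8, -0.3, 0.0):
    print(rho, describe_correlation(rho))
```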

In this example we show how to compute the coefficient of linear correlation between two discrete random variables.

Let $X$ be a $2$-dimensional random vector and denote its entries by $X_1$ and $X_2$.

Let the support of $X$ be $R_X$ and its joint probability mass function be $p_X(x_1, x_2)$.

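The support of $X_1$ is $R_{X_1}$ and its probability mass function is

$$p_{X_1}(x_1) = \sum_{x_2 :\, (x_1, x_2) \in R_X} p_X(x_1, x_2)$$

The expected value of $X_1$ is

$$\operatorname{E}[X_1] = \sum_{x_1 \in R_{X_1}} x_1\, p_{X_1}(x_1)$$

The expected value of $X_1^2$ is

$$\operatorname{E}[X_1^2] = \sum_{x_1 \in R_{X_1}} x_1^2\, p_{X_1}(x_1)$$

The variance of $X_1$ is

$$\operatorname{Var}[X_1] = \operatorname{E}[X_1^2] - \operatorname{E}[X_1]^2$$

The standard deviation of $X_1$ is

$$\operatorname{stdev}[X_1] = \sqrt{\operatorname{Var}[X_1]}$$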
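The marginal probability mass function and the moments of $X_1$ can be sketched in Python. The joint distribution below is hypothetical (it is not the distribution of this example); it is stored as a dictionary mapping each point $(x_1, x_2)$ of the support to its probability, and the helper names are illustrative.

```python
import math
from collections import defaultdict

# Hypothetical joint pmf of X = (X1, X2), for illustration only
joint_pmf = {(0, 0): 0.4, (1, 0): 0.1, (1, 1): 0.3, (2, 1): 0.2}


def marginal_pmf(joint, index):
    """Sum the joint pmf over the other entry to get the pmf of entry `index`."""
    marginal = defaultdict(float)
    for point, p in joint.items():
        marginal[point[index]] += p
    return dict(marginal)


def moments(pmf):
    """Return E[Z], E[Z^2], Var[Z] and stdev[Z] for a pmf given as {value: prob}."""
    mean = sum(z * p for z, p in pmf.items())
    second = sum(z ** 2 * p for z, p in pmf.items())
    variance = max(second - mean ** 2, 0.0)  # guard against tiny negative rounding
    return mean, second, variance, math.sqrt(variance)


p_x1 = marginal_pmf(joint_pmf, 0)
mean_x1, second_x1, var_x1, sd_x1 = moments(p_x1)
print(p_x1)
print(mean_x1, var_x1, sd_x1)  # approximately 0.8, 0.56, 0.748
```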

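The support of $X_2$ is $R_{X_2}$ and its probability mass function is

$$p_{X_2}(x_2) = \sum_{x_1 :\, (x_1, x_2) \in R_X} p_X(x_1, x_2)$$

The expected value of $X_2$ is

$$\operatorname{E}[X_2] = \sum_{x_2 \in R_{X_2}} x_2\, p_{X_2}(x_2)$$

The expected value of $X_2^2$ is

$$\operatorname{E}[X_2^2] = \sum_{x_2 \in R_{X_2}} x_2^2\, p_{X_2}(x_2)$$

The variance of $X_2$ is

$$\operatorname{Var}[X_2] = \operatorname{E}[X_2^2] - \operatorname{E}[X_2]^2$$

The standard deviation of $X_2$ is

$$\operatorname{stdev}[X_2] = \sqrt{\operatorname{Var}[X_2]}$$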

Using the transformation theorem, we can compute the expected value of $X_1 X_2$:

$$\operatorname{E}[X_1 X_2] = \sum_{(x_1, x_2) \in R_X} x_1 x_2\, p_X(x_1, x_2)$$
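The transformation theorem means that $\operatorname{E}[X_1 X_2]$ is a weighted sum over the joint support, so the distribution of the product $X_1 X_2$ never needs to be derived. A sketch with a hypothetical joint pmf (not this example's) and a hypothetical helper `expect`:

```python
# Hypothetical joint pmf of X = (X1, X2), for illustration only
joint_pmf = {(0, 0): 0.4, (1, 0): 0.1, (1, 1): 0.3, (2, 1): 0.2}


def expect(g, joint):
    """E[g(X1, X2)] by the transformation theorem: sum of g(x) * p_X(x) over the support."""
    return sum(g(x1, x2) * p for (x1, x2), p in joint.items())


e_x1x2 = expect(lambda x1, x2: x1 * x2, joint_pmf)
print(e_x1x2)  # approximately 0.7, i.e. 1*1*0.3 + 2*1*0.2
```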

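Hence, the covariance between $X_1$ and $X_2$ is

$$\operatorname{Cov}[X_1, X_2] = \operatorname{E}[X_1 X_2] - \operatorname{E}[X_1]\operatorname{E}[X_2]$$

and the linear correlation coefficient is

$$\operatorname{Corr}[X_1, X_2] = \frac{\operatorname{Cov}[X_1, X_2]}{\operatorname{stdev}[X_1]\,\operatorname{stdev}[X_2]}$$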
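Putting the pieces together, the whole calculation can be sketched as follows. The joint pmf is hypothetical (not the one of this example); every moment is obtained from it through the transformation theorem, and the zero-standard-deviation convention is applied at the end.

```python
import math

# Hypothetical joint pmf of X = (X1, X2), for illustration only
joint_pmf = {(0, 0): 0.4, (1, 0): 0.1, (1, 1): 0.3, (2, 1): 0.2}


def expect(g):
    """E[g(X1, X2)] computed from the joint pmf (transformation theorem)."""
    return sum(g(x1, x2) * p for (x1, x2), p in joint_pmf.items())


e1 = expect(lambda x1, x2: x1)
e2 = expect(lambda x1, x2: x2)
sd1 = math.sqrt(max(expect(lambda x1, x2: x1 ** 2) - e1 ** 2, 0.0))
sd2 = math.sqrt(max(expect(lambda x1, x2: x2 ** 2) - e2 ** 2, 0.0))
cov = expect(lambda x1, x2: x1 * x2) - e1 * e2  # Cov = E[X1 X2] - E[X1] E[X2]
corr = cov / (sd1 * sd2) if sd1 > 0 and sd2 > 0 else 0.0
print(cov, corr)  # approximately 0.3 and 0.8018
```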

The following sections contain more details about the linear correlation coefficient.

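Let $X$ be a random variable with $\operatorname{stdev}[X] > 0$, then

$$\operatorname{Corr}[X,X] = 1$$

This is proved as follows:

$$\operatorname{Corr}[X,X] = \frac{\operatorname{Cov}[X,X]}{\operatorname{stdev}[X]\,\operatorname{stdev}[X]} = \frac{\operatorname{Var}[X]}{\operatorname{Var}[X]} = 1$$

where we have used the fact that

$$\operatorname{Cov}[X,X] = \operatorname{Var}[X]$$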
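A quick numerical check of this property: the joint distribution of the pair $(X, X)$ puts all its mass on the diagonal. The distribution of $X$ below is hypothetical, as is the helper `corr_from_joint_pmf`; the last case shows why $\operatorname{stdev}[X] > 0$ is needed, since a constant gets correlation $0$ under the convention for zero standard deviations.

```python
import math


def corr_from_joint_pmf(pmf):
    """Corr[X, Y] from a joint pmf {(x, y): prob}; 0.0 if a standard deviation is zero."""
    ex = sum(x * p for (x, _), p in pmf.items())
    ey = sum(y * p for (_, y), p in pmf.items())
    vx = sum((x - ex) ** 2 * p for (x, _), p in pmf.items())
    vy = sum((y - ey) ** 2 * p for (_, y), p in pmf.items())
    cov = sum((x - ex) * (y - ey) * p for (x, y), p in pmf.items())
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


# Hypothetical distribution of X, for illustration only
p_x = {-1: 0.25, 0: 0.5, 3: 0.25}
pair_x_x = {(x, x): p for x, p in p_x.items()}  # joint pmf of (X, X)
print(corr_from_joint_pmf(pair_x_x))  # approximately 1

constant = {(5, 5): 1.0}  # X = 5 with probability 1, so stdev[X] = 0
print(corr_from_joint_pmf(constant))  # 0.0 under the convention
```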

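The linear correlation coefficient is symmetric:

$$\operatorname{Corr}[X,Y] = \operatorname{Corr}[Y,X]$$

This is proved as follows:

$$\operatorname{Corr}[X,Y] = \frac{\operatorname{Cov}[X,Y]}{\operatorname{stdev}[X]\,\operatorname{stdev}[Y]} = \frac{\operatorname{Cov}[Y,X]}{\operatorname{stdev}[Y]\,\operatorname{stdev}[X]} = \operatorname{Corr}[Y,X]$$

where we have used the fact that covariance is symmetric:

$$\operatorname{Cov}[X,Y] = \operatorname{Cov}[Y,X]$$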
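Symmetry can also be checked numerically by swapping the two entries of every point in the joint support. The joint pmf below is hypothetical, and `corr_from_joint_pmf` is an illustrative helper.

```python
import math


def corr_from_joint_pmf(pmf):
    """Corr[X, Y] from a joint pmf {(x, y): prob}; 0.0 if a standard deviation is zero."""
    ex = sum(x * p for (x, _), p in pmf.items())
    ey = sum(y * p for (_, y), p in pmf.items())
    vx = sum((x - ex) ** 2 * p for (x, _), p in pmf.items())
    vy = sum((y - ey) ** 2 * p for (_, y), p in pmf.items())
    cov = sum((x - ex) * (y - ey) * p for (x, y), p in pmf.items())
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


# Hypothetical joint pmf of (X, Y), for illustration only
joint_xy = {(0, 1): 0.2, (1, 0): 0.3, (1, 2): 0.1, (2, 2): 0.4}
joint_yx = {(y, x): p for (x, y), p in joint_xy.items()}  # joint pmf of (Y, X)
print(corr_from_joint_pmf(joint_xy), corr_from_joint_pmf(joint_yx))  # equal values
```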

Below you can find some exercises with explained solutions.

Let $X$ be a $2$-dimensional discrete random vector and denote its components by $X_1$ and $X_2$.

Let the support of $X$ be $R_X$ and its joint probability mass function be $p_X(x_1, x_2)$.

Compute the coefficient of linear correlation between $X_1$ and $X_2$.

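The support of $X_1$ is $R_{X_1}$ and its marginal probability mass function is

$$p_{X_1}(x_1) = \sum_{x_2 :\, (x_1, x_2) \in R_X} p_X(x_1, x_2)$$

The expected value of $X_1$ is

$$\operatorname{E}[X_1] = \sum_{x_1 \in R_{X_1}} x_1\, p_{X_1}(x_1)$$

The expected value of $X_1^2$ is

$$\operatorname{E}[X_1^2] = \sum_{x_1 \in R_{X_1}} x_1^2\, p_{X_1}(x_1)$$

The variance of $X_1$ is

$$\operatorname{Var}[X_1] = \operatorname{E}[X_1^2] - \operatorname{E}[X_1]^2$$

The standard deviation of $X_1$ is

$$\operatorname{stdev}[X_1] = \sqrt{\operatorname{Var}[X_1]}$$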

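The support of $X_2$ is $R_{X_2}$ and its marginal probability mass function is

$$p_{X_2}(x_2) = \sum_{x_1 :\, (x_1, x_2) \in R_X} p_X(x_1, x_2)$$

The expected value of $X_2$ is

$$\operatorname{E}[X_2] = \sum_{x_2 \in R_{X_2}} x_2\, p_{X_2}(x_2)$$

The expected value of $X_2^2$ is

$$\operatorname{E}[X_2^2] = \sum_{x_2 \in R_{X_2}} x_2^2\, p_{X_2}(x_2)$$

The variance of $X_2$ is

$$\operatorname{Var}[X_2] = \operatorname{E}[X_2^2] - \operatorname{E}[X_2]^2$$

The standard deviation of $X_2$ is

$$\operatorname{stdev}[X_2] = \sqrt{\operatorname{Var}[X_2]}$$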

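Using the transformation theorem, we can compute the expected value of $X_1 X_2$:

$$\operatorname{E}[X_1 X_2] = \sum_{(x_1, x_2) \in R_X} x_1 x_2\, p_X(x_1, x_2)$$

Hence, the covariance between $X_1$ and $X_2$ is

$$\operatorname{Cov}[X_1, X_2] = \operatorname{E}[X_1 X_2] - \operatorname{E}[X_1]\operatorname{E}[X_2]$$

and the coefficient of linear correlation between $X_1$ and $X_2$ is

$$\operatorname{Corr}[X_1, X_2] = \frac{\operatorname{Cov}[X_1, X_2]}{\operatorname{stdev}[X_1]\,\operatorname{stdev}[X_2]}$$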
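When the probabilities are rational, the covariance and the squared correlation can be computed exactly with Python's `fractions.Fraction`, leaving only the final square root to floating point. The joint pmf below is hypothetical, chosen for illustration, and is not the one of this exercise.

```python
import math
from fractions import Fraction as F

# Hypothetical joint pmf with exact rational probabilities, for illustration only
joint_pmf = {(0, 0): F(2, 5), (1, 0): F(1, 10), (1, 1): F(3, 10), (2, 1): F(1, 5)}


def expect(g):
    """Exact E[g(X1, X2)] by the transformation theorem."""
    return sum(g(x1, x2) * p for (x1, x2), p in joint_pmf.items())


e1 = expect(lambda a, b: a)
e2 = expect(lambda a, b: b)
var1 = expect(lambda a, b: a * a) - e1 ** 2
var2 = expect(lambda a, b: b * b) - e2 ** 2
cov = expect(lambda a, b: a * b) - e1 * e2

if var1 == 0 or var2 == 0:
    rho_squared, rho = F(0), 0.0  # zero-standard-deviation convention
else:
    rho_squared = cov ** 2 / (var1 * var2)  # exact rational number
    rho = math.copysign(math.sqrt(rho_squared), cov)
print(cov, rho_squared)  # 3/10 9/14
print(rho)  # approximately 0.8018
```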

Let $X$ be a $2$-dimensional discrete random vector and denote its entries by $X_1$ and $X_2$.

Let the support of $X$ be $R_X$ and its joint probability mass function be $p_X(x_1, x_2)$.

Compute the coefficient of linear correlation between $X_1$ and $X_2$.

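The support of $X_1$ is $R_{X_1}$ and its marginal probability mass function is

$$p_{X_1}(x_1) = \sum_{x_2 :\, (x_1, x_2) \in R_X} p_X(x_1, x_2)$$

The mean of $X_1$ is

$$\operatorname{E}[X_1] = \sum_{x_1 \in R_{X_1}} x_1\, p_{X_1}(x_1)$$

The expected value of $X_1^2$ is

$$\operatorname{E}[X_1^2] = \sum_{x_1 \in R_{X_1}} x_1^2\, p_{X_1}(x_1)$$

The variance of $X_1$ is

$$\operatorname{Var}[X_1] = \operatorname{E}[X_1^2] - \operatorname{E}[X_1]^2$$

The standard deviation of $X_1$ is

$$\operatorname{stdev}[X_1] = \sqrt{\operatorname{Var}[X_1]}$$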

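The support of $X_2$ is $R_{X_2}$ and its probability mass function is

$$p_{X_2}(x_2) = \sum_{x_1 :\, (x_1, x_2) \in R_X} p_X(x_1, x_2)$$

The mean of $X_2$ is

$$\operatorname{E}[X_2] = \sum_{x_2 \in R_{X_2}} x_2\, p_{X_2}(x_2)$$

The expected value of $X_2^2$ is

$$\operatorname{E}[X_2^2] = \sum_{x_2 \in R_{X_2}} x_2^2\, p_{X_2}(x_2)$$

The variance of $X_2$ is

$$\operatorname{Var}[X_2] = \operatorname{E}[X_2^2] - \operatorname{E}[X_2]^2$$

The standard deviation of $X_2$ is

$$\operatorname{stdev}[X_2] = \sqrt{\operatorname{Var}[X_2]}$$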

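The expected value of the product $X_1 X_2$ can be derived using the transformation theorem:

$$\operatorname{E}[X_1 X_2] = \sum_{(x_1, x_2) \in R_X} x_1 x_2\, p_X(x_1, x_2)$$

Therefore, putting pieces together, the covariance between $X_1$ and $X_2$ is

$$\operatorname{Cov}[X_1, X_2] = \operatorname{E}[X_1 X_2] - \operatorname{E}[X_1]\operatorname{E}[X_2]$$

and the coefficient of linear correlation between $X_1$ and $X_2$ is

$$\operatorname{Corr}[X_1, X_2] = \frac{\operatorname{Cov}[X_1, X_2]}{\operatorname{stdev}[X_1]\,\operatorname{stdev}[X_2]}$$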

Let $X = [X_1\ X_2]^\top$ be a continuous random vector with support $R_X$ and let its joint probability density function be $f_X(x_1, x_2)$.

Compute the coefficient of linear correlation between $X_1$ and $X_2$.

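The support of $X_1$ is $R_{X_1}$.

When $x_1 \notin R_{X_1}$, the marginal probability density function of $X_1$ is $f_{X_1}(x_1) = 0$, while, when $x_1 \in R_{X_1}$, the marginal probability density function of $X_1$ can be obtained by integrating $x_2$ out of the joint probability density as follows:

$$f_{X_1}(x_1) = \int_{-\infty}^{\infty} f_X(x_1, x_2)\,dx_2$$

Thus, the marginal probability density function of $X_1$ is

$$f_{X_1}(x_1) = \begin{cases} \int_{-\infty}^{\infty} f_X(x_1, x_2)\,dx_2 & \text{if } x_1 \in R_{X_1} \\ 0 & \text{otherwise} \end{cases}$$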
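Integrating $x_2$ out of the joint density can also be done numerically. The sketch assumes a hypothetical joint density $f_X(x_1, x_2) = x_1 + x_2$ on the unit square (not the density of this exercise), chosen because its marginal $f_{X_1}(x_1) = x_1 + 1/2$ is easy to check; it uses a simple midpoint rule rather than any particular library.

```python
def density(x1: float, x2: float) -> float:
    """Hypothetical joint pdf: x1 + x2 on [0, 1] x [0, 1], zero elsewhere."""
    if 0.0 <= x1 <= 1.0 and 0.0 <= x2 <= 1.0:
        return x1 + x2
    return 0.0


def marginal_x1(x1: float, n: int = 1000) -> float:
    """Integrate x2 out of the joint pdf with an n-point midpoint rule on [0, 1]."""
    if not 0.0 <= x1 <= 1.0:
        return 0.0  # x1 outside the support of X1
    h = 1.0 / n
    return sum(density(x1, (j + 0.5) * h) for j in range(n)) * h


print(marginal_x1(0.3))  # approximately 0.8 = 0.3 + 1/2
print(marginal_x1(1.5))  # 0.0
```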

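The expected value of $X_1$ is

$$\operatorname{E}[X_1] = \int_{-\infty}^{\infty} x_1 f_{X_1}(x_1)\,dx_1$$

The expected value of $X_1^2$ is

$$\operatorname{E}[X_1^2] = \int_{-\infty}^{\infty} x_1^2 f_{X_1}(x_1)\,dx_1$$

The variance of $X_1$ is

$$\operatorname{Var}[X_1] = \operatorname{E}[X_1^2] - \operatorname{E}[X_1]^2$$

The standard deviation of $X_1$ is

$$\operatorname{stdev}[X_1] = \sqrt{\operatorname{Var}[X_1]}$$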

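The support of $X_2$ is $R_{X_2}$.

When $x_2 \notin R_{X_2}$, the marginal probability density function of $X_2$ is $f_{X_2}(x_2) = 0$, while, when $x_2 \in R_{X_2}$, the marginal probability density function of $X_2$ can be obtained by integrating $x_1$ out of the joint probability density as follows:

$$f_{X_2}(x_2) = \int_{-\infty}^{\infty} f_X(x_1, x_2)\,dx_1$$

We do not explicitly compute the integral, but we write the marginal probability density function of $X_2$ as follows:

$$f_{X_2}(x_2) = \begin{cases} \int_{-\infty}^{\infty} f_X(x_1, x_2)\,dx_1 & \text{if } x_2 \in R_{X_2} \\ 0 & \text{otherwise} \end{cases}$$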

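The expected value of $X_2$ is

$$\operatorname{E}[X_2] = \int_{-\infty}^{\infty} x_2 f_{X_2}(x_2)\,dx_2 = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x_2 f_X(x_1, x_2)\,dx_1\,dx_2$$

The expected value of $X_2^2$ is

$$\operatorname{E}[X_2^2] = \int_{-\infty}^{\infty} x_2^2 f_{X_2}(x_2)\,dx_2 = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x_2^2 f_X(x_1, x_2)\,dx_1\,dx_2$$

The variance of $X_2$ is

$$\operatorname{Var}[X_2] = \operatorname{E}[X_2^2] - \operatorname{E}[X_2]^2$$

The standard deviation of $X_2$ is

$$\operatorname{stdev}[X_2] = \sqrt{\operatorname{Var}[X_2]}$$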

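The expected value of the product $X_1 X_2$ can be computed by using the transformation theorem:

$$\operatorname{E}[X_1 X_2] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x_1 x_2 f_X(x_1, x_2)\,dx_1\,dx_2$$

Hence, by the covariance formula, the covariance between $X_1$ and $X_2$ is

$$\operatorname{Cov}[X_1, X_2] = \operatorname{E}[X_1 X_2] - \operatorname{E}[X_1]\operatorname{E}[X_2]$$

and the coefficient of linear correlation between $X_1$ and $X_2$ is

$$\operatorname{Corr}[X_1, X_2] = \frac{\operatorname{Cov}[X_1, X_2]}{\operatorname{stdev}[X_1]\,\operatorname{stdev}[X_2]}$$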
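The same steps can be approximated numerically by replacing each double integral with a two-dimensional midpoint rule. This is a sketch under an assumption: it uses the hypothetical joint density $f_X(x_1, x_2) = x_1 + x_2$ on the unit square (not this exercise's density), for which the exact answer works out to $\operatorname{Cov}[X_1, X_2] = -1/144$, $\operatorname{Var}[X_1] = \operatorname{Var}[X_2] = 11/144$ and hence $\operatorname{Corr}[X_1, X_2] = -1/11$.

```python
import math


def density(x1: float, x2: float) -> float:
    """Hypothetical joint pdf: x1 + x2 on [0, 1] x [0, 1], zero elsewhere."""
    if 0.0 <= x1 <= 1.0 and 0.0 <= x2 <= 1.0:
        return x1 + x2
    return 0.0


def expect(g, n: int = 200) -> float:
    """Approximate E[g(X1, X2)] with an n x n midpoint rule on the unit square."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        x1 = (i + 0.5) * h
        for j in range(n):
            x2 = (j + 0.5) * h
            total += g(x1, x2) * density(x1, x2)
    return total * h * h


e1 = expect(lambda a, b: a)
e2 = expect(lambda a, b: b)
var1 = expect(lambda a, b: a * a) - e1 ** 2
var2 = expect(lambda a, b: b * b) - e2 ** 2
cov = expect(lambda a, b: a * b) - e1 * e2  # covariance formula
rho = cov / math.sqrt(var1 * var2)
print(rho)  # close to -1/11, about -0.0909
```

The midpoint rule is used only to keep the sketch dependency-free; an adaptive quadrature routine would be the usual choice for a density with less regular behavior.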

Please cite as:

Taboga, Marco (2021). "Linear correlation", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-probability/linear-correlation.
