In this lecture, we explain how to derive the maximum likelihood estimator (MLE) of the parameter of a Poisson distribution.

Before reading this lecture, you might want to revise the pages on maximum likelihood estimation and on the Poisson distribution.

We observe $n$ independent draws from a Poisson distribution.

In other words, there are $n$ independent Poisson random variables
$$X_1, \ldots, X_n$$
and we observe their realizations
$$x_1, \ldots, x_n.$$

The probability mass function of a single draw $X_j$ is
$$p_X(x_j) = \begin{cases} \dfrac{e^{-\lambda_0}\,\lambda_0^{x_j}}{x_j!} & \text{if } x_j \in R_X \\ 0 & \text{otherwise} \end{cases}$$
where:

- $\lambda_0$ is the parameter of interest (for which we want to derive the MLE);
- the support of the distribution is the set of non-negative integer numbers: $R_X = \mathbb{Z}_+ = \{0, 1, 2, \ldots\}$;
- $x_j!$ is the factorial of $x_j$.
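As a quick numerical check of the formula, here is a minimal Python sketch using only the standard library. The helper name `poisson_pmf` is hypothetical, introduced only for illustration; it returns zero outside the support and confirms that the masses over a truncated support sum to one.

```python
import math

def poisson_pmf(x, lam):
    """Poisson mass p_X(x) = exp(-lam) * lam**x / x! (hypothetical helper).

    Returns 0 outside the support R_X, i.e. for anything that is not
    a non-negative integer.
    """
    if not isinstance(x, int) or x < 0:
        return 0.0
    return math.exp(-lam) * lam ** x / math.factorial(x)

# The masses over the support should sum to one; truncating at 100 leaves
# a negligible tail for lam = 3.5.
total = sum(poisson_pmf(k, 3.5) for k in range(100))
print(round(total, 12))  # prints 1.0
```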

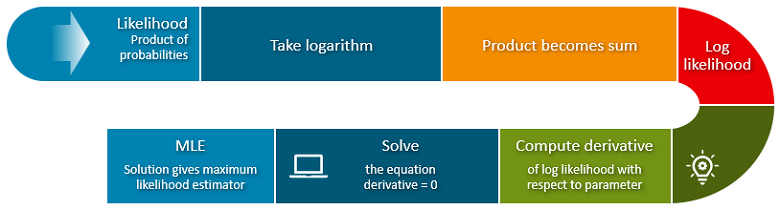

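The likelihood function is
$$L(\lambda; x_1, \ldots, x_n) = e^{-n\lambda}\,\frac{1}{\prod_{j=1}^{n} x_j!}\,\lambda^{\sum_{j=1}^{n} x_j}$$
where $\lambda$ denotes a generic value of the parameter.

The $n$ observations are independent. As a consequence, the likelihood function is equal to the product of their probability mass functions:
$$L(\lambda; x_1, \ldots, x_n) = \prod_{j=1}^{n} p_X(x_j; \lambda).$$
Furthermore, the observed values $x_1, \ldots, x_n$ necessarily belong to the support $R_X$. So, we have
$$L(\lambda; x_1, \ldots, x_n) = \prod_{j=1}^{n} \frac{e^{-\lambda}\,\lambda^{x_j}}{x_j!} = e^{-n\lambda}\,\frac{1}{\prod_{j=1}^{n} x_j!}\,\lambda^{\sum_{j=1}^{n} x_j}.$$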
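The two expressions for the likelihood can be compared numerically. In this sketch the sample `xs` is made-up data and the function names are hypothetical; the product of the individual masses and the closed form should agree up to rounding error.

```python
import math

def likelihood_product(lam, xs):
    # Product of the individual Poisson masses (valid because draws are independent).
    result = 1.0
    for x in xs:
        result *= math.exp(-lam) * lam ** x / math.factorial(x)
    return result

def likelihood_closed_form(lam, xs):
    # exp(-n * lam) * lam**(sum of x_j) / (product of x_j!)
    n = len(xs)
    denom = math.prod(math.factorial(x) for x in xs)
    return math.exp(-n * lam) * lam ** sum(xs) / denom

xs = [2, 0, 3, 1, 4]  # hypothetical observed counts
for lam in (0.5, 2.0, 3.7):
    print(lam, likelihood_product(lam, xs), likelihood_closed_form(lam, xs))
```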

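The log-likelihood function is
$$l(\lambda; x_1, \ldots, x_n) = -n\lambda - \sum_{j=1}^{n} \ln(x_j!) + \ln(\lambda) \sum_{j=1}^{n} x_j.$$

By taking the natural logarithm of the likelihood function derived above, we get the log-likelihood:
$$\begin{aligned} l(\lambda; x_1, \ldots, x_n) &= \ln L(\lambda; x_1, \ldots, x_n) \\ &= \ln\left(e^{-n\lambda}\right) - \ln\left(\prod_{j=1}^{n} x_j!\right) + \ln\left(\lambda^{\sum_{j=1}^{n} x_j}\right) \\ &= -n\lambda - \sum_{j=1}^{n} \ln(x_j!) + \ln(\lambda) \sum_{j=1}^{n} x_j. \end{aligned}$$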
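In code it is usually preferable to work with the log-likelihood directly. The sketch below (hypothetical helper names, made-up data) uses `math.lgamma(x + 1)`, which equals $\ln(x!)$, so that no large factorials are formed, and checks the result against the sum of the logs of the individual masses.

```python
import math

def log_likelihood(lam, xs):
    # l(lam) = -n*lam - sum ln(x_j!) + ln(lam) * sum x_j
    # math.lgamma(x + 1) equals ln(x!) without building the factorial itself.
    n = len(xs)
    return -n * lam - sum(math.lgamma(x + 1) for x in xs) + math.log(lam) * sum(xs)

def log_likelihood_direct(lam, xs):
    # Sum of the logs of the individual Poisson masses, for comparison only.
    return sum(math.log(math.exp(-lam) * lam ** x / math.factorial(x)) for x in xs)

xs = [2, 0, 3, 1, 4]  # hypothetical observed counts
print(log_likelihood(2.0, xs), log_likelihood_direct(2.0, xs))
```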

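The maximum likelihood estimator of $\lambda$ is
$$\hat{\lambda}_n = \frac{1}{n} \sum_{j=1}^{n} x_j.$$

The MLE is the solution of the following maximization problem:
$$\hat{\lambda}_n = \arg\max_{\lambda > 0} l(\lambda; x_1, \ldots, x_n).$$
The first order condition for a maximum is
$$\frac{\partial}{\partial \lambda} l(\lambda; x_1, \ldots, x_n) = 0.$$
The first derivative of the log-likelihood with respect to the parameter $\lambda$ is
$$\frac{\partial}{\partial \lambda} l(\lambda; x_1, \ldots, x_n) = -n + \frac{1}{\lambda} \sum_{j=1}^{n} x_j.$$
Impose that the first derivative be equal to zero, and get
$$\hat{\lambda}_n = \frac{1}{n} \sum_{j=1}^{n} x_j.$$
The second derivative, $-\frac{1}{\lambda^2}\sum_{j=1}^{n} x_j$, is negative whenever at least one observation is positive, so the log-likelihood is strictly concave and this stationary point is indeed its maximum.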
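To see that the first order condition picks out the maximum, one can maximize the log-likelihood numerically and compare the result with the sample mean. This sketch uses golden-section search, a generic one-dimensional optimizer chosen here for illustration (not a method prescribed by the lecture). The data are made up, and the search interval $(0, \max_j x_j]$ is an assumption that contains the maximizer whenever $\sum_j x_j > 0$.

```python
import math

def log_likelihood(lam, xs):
    # Poisson log-likelihood; math.lgamma(x + 1) equals ln(x!).
    n = len(xs)
    return -n * lam - sum(math.lgamma(x + 1) for x in xs) + math.log(lam) * sum(xs)

def golden_section_max(f, lo, hi, tol=1e-10):
    # Maximize a unimodal function f on [lo, hi] by golden-section search.
    inv_phi = (math.sqrt(5) - 1) / 2  # about 0.618
    a, b = lo, hi
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) > f(d):
            # Maximum lies in [a, d]: shrink from the right.
            b, d = d, c
            c = b - inv_phi * (b - a)
        else:
            # Maximum lies in [c, b]: shrink from the left.
            a, c = c, d
            d = a + inv_phi * (b - a)
    return (a + b) / 2

xs = [2, 0, 3, 1, 4]  # hypothetical observed counts (their sum must be > 0)
lam_numeric = golden_section_max(lambda lam: log_likelihood(lam, xs), 1e-9, max(xs))
lam_closed = sum(xs) / len(xs)
print(lam_numeric, lam_closed)  # both close to 2.0
```

The numerical maximizer only matches the closed form to within the optimizer's tolerance (and the flatness of the log-likelihood near its peak), which is one practical reason to prefer the analytical solution when it exists.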

Therefore, the estimator $\hat{\lambda}_n$ is just the sample mean of the $n$ observations in the sample.

This makes intuitive sense because the expected value of a Poisson random variable is equal to its parameter $\lambda_0$, and the sample mean is an unbiased estimator of the expected value.

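The estimator $\hat{\lambda}_n$ is asymptotically normal with asymptotic mean equal to $\lambda_0$ and asymptotic variance equal to
$$V = \lambda_0.$$
In other words, $\sqrt{n}\,(\hat{\lambda}_n - \lambda_0)$ converges in distribution to a normal distribution with mean $0$ and variance $\lambda_0$.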

The score of a single observation $X$, whose log-likelihood is $l(\lambda; X) = -\lambda - \ln(X!) + X \ln(\lambda)$, is
$$\nabla_{\lambda} l(\lambda; X) = -1 + \frac{X}{\lambda}.$$
The Hessian is
$$\nabla_{\lambda\lambda} l(\lambda; X) = -\frac{X}{\lambda^2}.$$
The information equality implies that
$$\mathrm{E}\left[\left(\nabla_{\lambda} l(\lambda_0; X)\right)^2\right] = -\mathrm{E}\left[\nabla_{\lambda\lambda} l(\lambda_0; X)\right] = \frac{\mathrm{E}[X]}{\lambda_0^2} = \frac{\lambda_0}{\lambda_0^2} = \frac{1}{\lambda_0}$$
where we have used the fact that the expected value of a Poisson random variable with parameter $\lambda_0$ is equal to $\lambda_0$.

Finally, the asymptotic variance is
$$V = \left(\mathrm{E}\left[\left(\nabla_{\lambda} l(\lambda_0; X)\right)^2\right]\right)^{-1} = \left(\frac{1}{\lambda_0}\right)^{-1} = \lambda_0.$$
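The information equality and the resulting asymptotic variance can be verified by computing the expectations as sums over the support, truncated at a point where the remaining probability mass is negligible. In this sketch the true parameter `lam0 = 3.0`, the truncation point `K` and the helper names are arbitrary choices made for illustration.

```python
import math

def poisson_pmf(k, lam):
    # Poisson mass at a non-negative integer k.
    return math.exp(-lam) * lam ** k / math.factorial(k)

def score(lam, x):
    # First derivative of ln p(x; lam) = -lam - ln(x!) + x * ln(lam)
    return -1.0 + x / lam

def hessian(lam, x):
    # Second derivative of ln p(x; lam)
    return -x / lam ** 2

lam0 = 3.0  # hypothetical true parameter
K = 150     # truncation point; the mass beyond K is negligible for lam0 = 3
support = range(K)

e_score_sq = sum(poisson_pmf(k, lam0) * score(lam0, k) ** 2 for k in support)
e_neg_hess = sum(poisson_pmf(k, lam0) * -hessian(lam0, k) for k in support)
V = 1.0 / e_score_sq  # inverse of the information

print(e_score_sq, e_neg_hess, 1 / lam0)  # all approximately 0.3333
print(V)                                  # approximately 3.0, i.e. lam0
```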

Thus, the distribution of the maximum likelihood estimator $\hat{\lambda}_n$ can be approximated by a normal distribution with mean $\lambda_0$ and variance $\lambda_0 / n$.
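A small Monte Carlo experiment illustrates the normal approximation. The sketch below draws Poisson samples with Knuth's multiplication method (a simple sampler suited to small parameter values, used here because the Python standard library has no Poisson generator), computes $\hat{\lambda}_n$ for each replication, and compares the mean and variance of the estimates with $\lambda_0$ and $\lambda_0/n$. It also checks that roughly 95% of the estimates fall within $\pm 1.96\sqrt{\lambda_0/n}$ of $\lambda_0$. The values of `lam0`, `n`, `reps` and the seed are arbitrary assumptions.

```python
import math
import random
import statistics

def draw_poisson(lam, rng):
    # Knuth's multiplication method: count uniforms until their product
    # drops to exp(-lam) or below. Adequate for small lam.
    threshold = math.exp(-lam)
    k, p = 0, rng.random()
    while p > threshold:
        k += 1
        p *= rng.random()
    return k

rng = random.Random(12345)        # fixed seed for reproducibility
lam0, n, reps = 3.0, 200, 2000    # hypothetical settings

# One MLE (sample mean) per simulated sample of size n.
estimates = [
    statistics.fmean(draw_poisson(lam0, rng) for _ in range(n))
    for _ in range(reps)
]

half_width = 1.96 * math.sqrt(lam0 / n)
coverage = statistics.fmean(abs(e - lam0) <= half_width for e in estimates)

print(statistics.fmean(estimates))     # close to lam0 = 3.0
print(statistics.variance(estimates))  # close to lam0 / n = 0.015
print(coverage)                        # close to 0.95
```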

On StatLect you can find detailed derivations of MLEs for numerous other distributions and statistical models.

| Distribution or model | Type | Solution |
|---|---|---|
| Exponential distribution | Univariate distribution | Analytical |
| Normal distribution | Univariate distribution | Analytical |
| T distribution | Univariate distribution | Numerical |
| Multivariate normal distribution | Multivariate distribution | Analytical |
| Normal linear regression model | Regression model | Analytical |
| Logistic classification model | Classification model | Numerical |
| Probit classification model | Classification model | Numerical |
| Gaussian mixture | Mixture of distributions | Numerical (EM) |

Please cite as:

Taboga, Marco (2021). "Poisson distribution - Maximum Likelihood Estimation", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/fundamentals-of-statistics/Poisson-distribution-maximum-likelihood.
